Tested: Nvidia’s GeForce RTX 4090 is a content creation juggernaut

When the Nvidia GeForce RTX 4090 was announced with an eye-watering $1,600 price tag, memes spread like wildfire. While $1,600 is a little too much for most gamers to spend on a single component (most PC build budgets I see are less than that for the whole PC), I couldn’t help but be intrigued by the potential performance improvements for my work: the 3D and AI-accelerated tasks I spend most of my day doing as part of managing the EposVox YouTube channel, instead of gaming.

Spoiler alert: The GeForce RTX 4090’s content creation performance is magical. In quite a few cases, the typically nonsense “2X performance increase” is actually true. But not everywhere.

Let’s dig in.

Our test setup

Most of my benchmarking was performed on this test bench:

- Intel Core i9-12900K CPU

- 32GB Corsair Vengeance DDR5 5200MT/s RAM

- ASUS ROG STRIX Z690-E Gaming WiFi Motherboard

- EVGA G3 850W PSU

- Source files stored on a PCIe gen 4 NVMe SSD

My objective was to see how much of an upgrade the RTX 4090 would be over the previous-generation GeForce RTX 3090, as well as the Titan RTX (the card I was primarily working on before). The RTX 3090 saw only minimal improvements over the Titan RTX for my use cases, so I wasn’t sure if the 4090 would really be a big leap. Some of the more hardcore testing, which I’ll mention later, was done on this second test bench:

- AMD Threadripper Pro 3975WX CPU

- 256GB Kingston ECC DDR4 RAM

- ASUS WRX80 SAGE Motherboard

- BeQuiet 1400W PSU

- Source files stored on a PCIe gen 4 NVMe SSD

Within each test, every GPU ran in the same configuration so that results stay comparable.

Video production

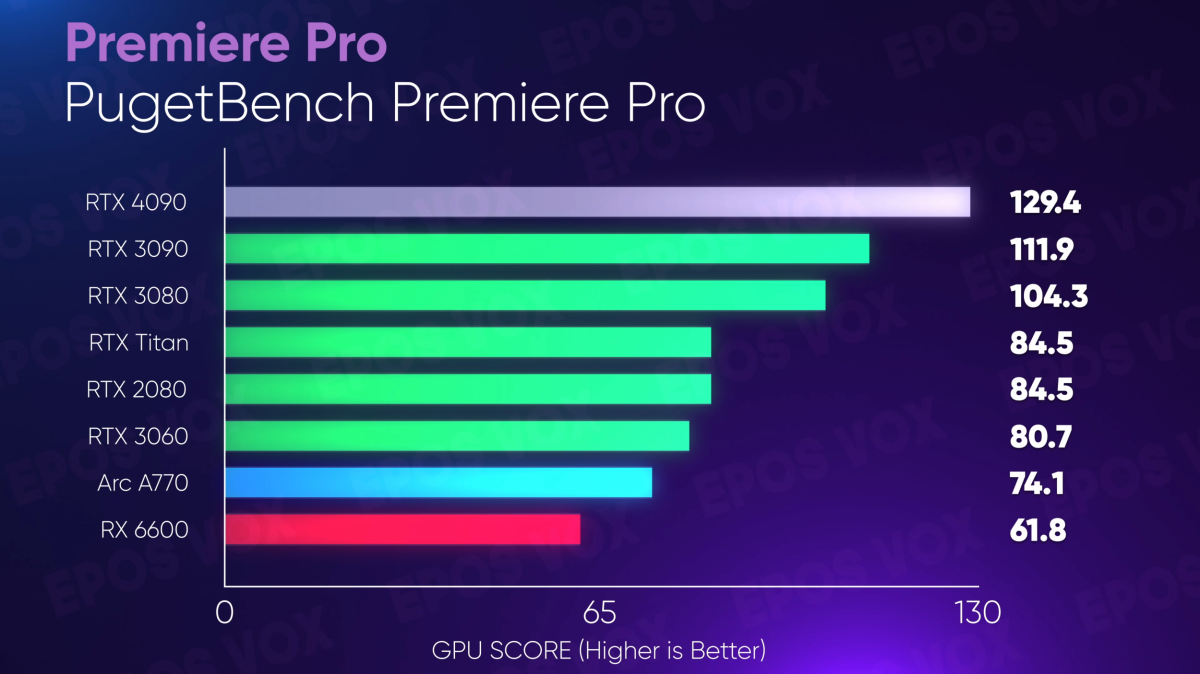

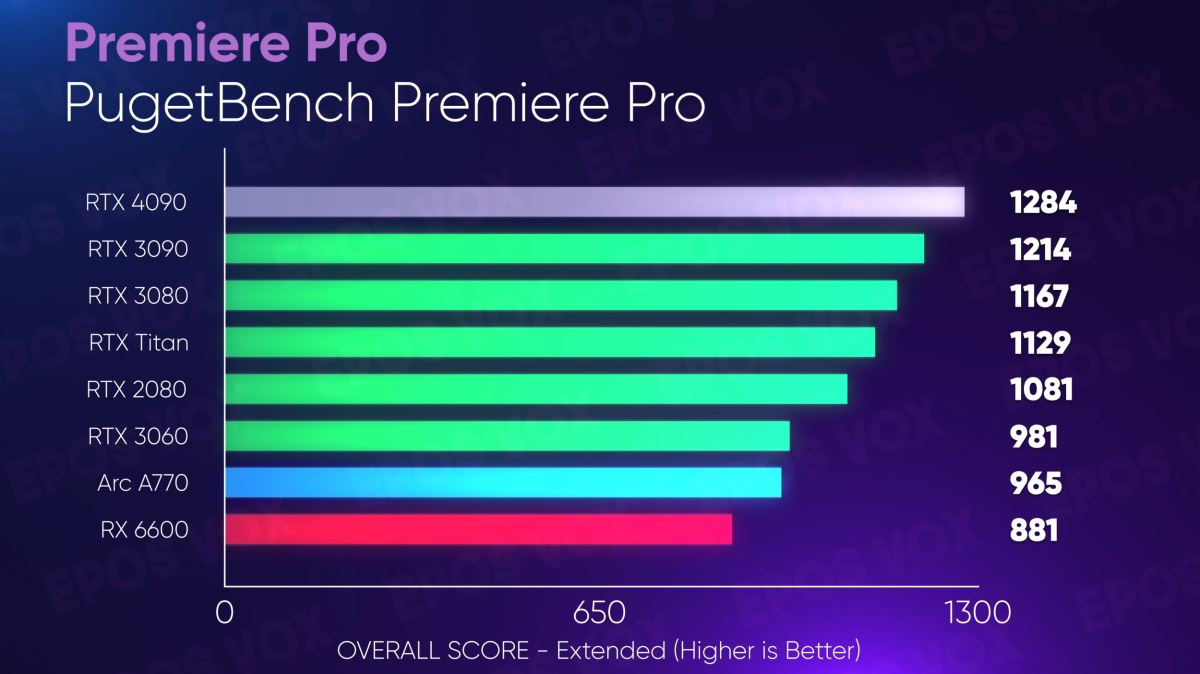

My day job is, of course, creating YouTube content, so one of the first things I had to test was the benefit I might see when creating video content. In PugetBench, from the workstation builders at Puget Systems, Adobe Premiere Pro sees very minimal performance improvement with the RTX 4090 (as is to be expected at this point).

Adam Taylor/IDG

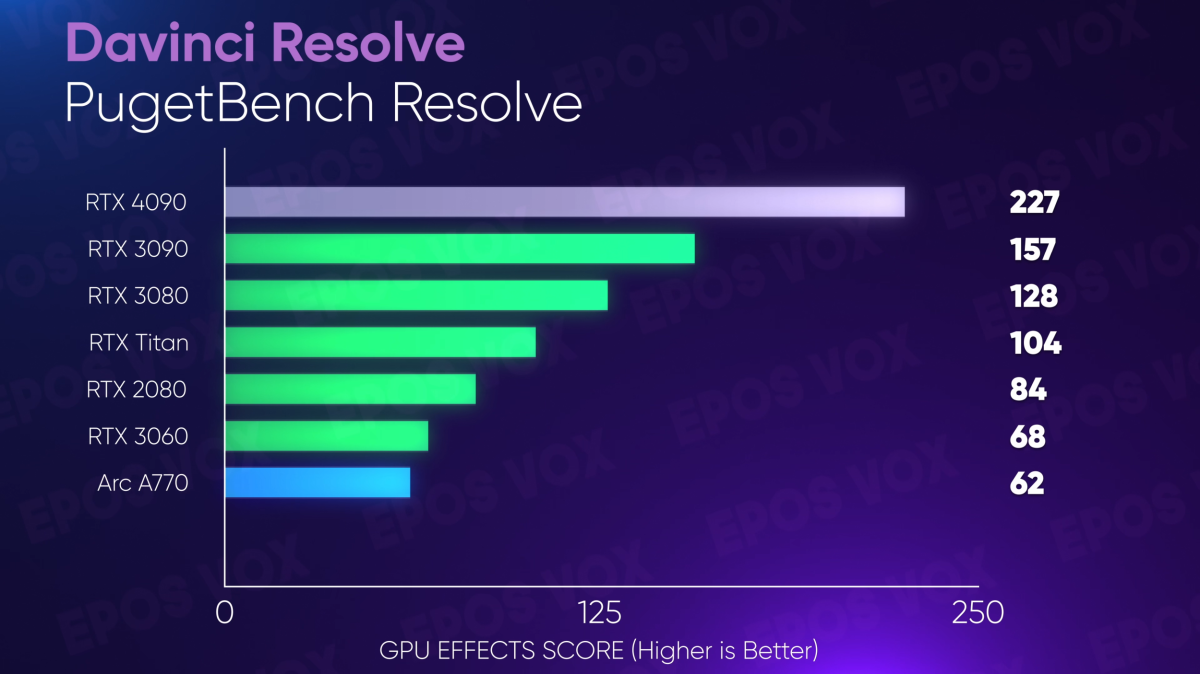

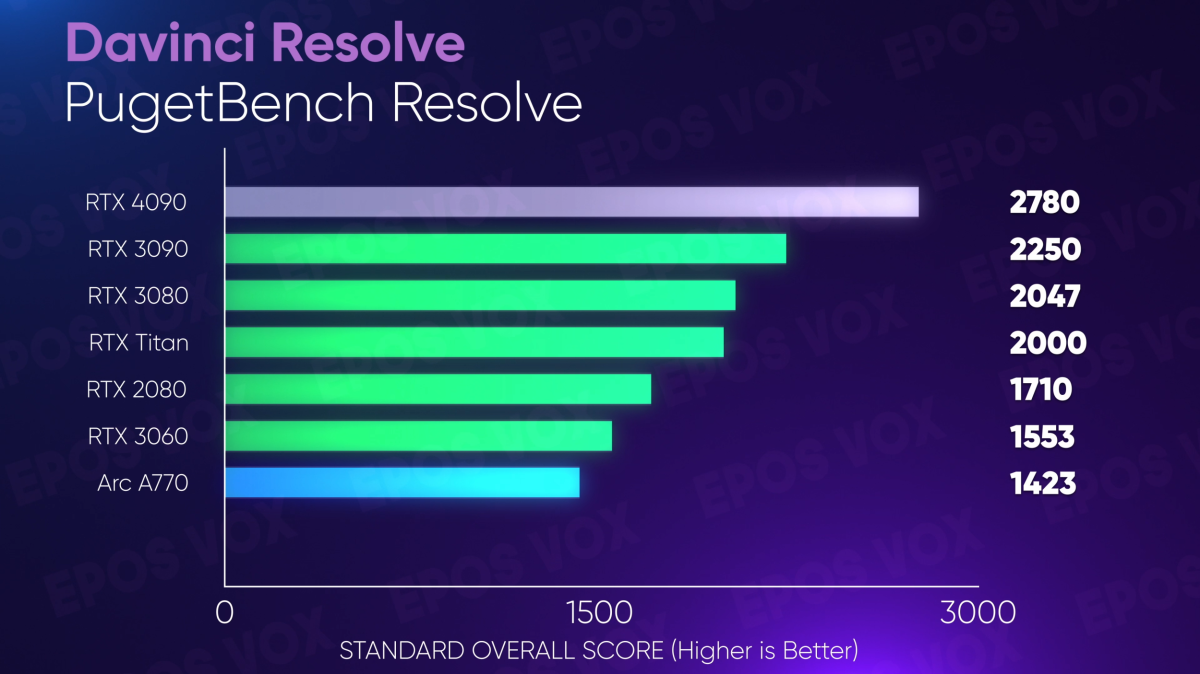

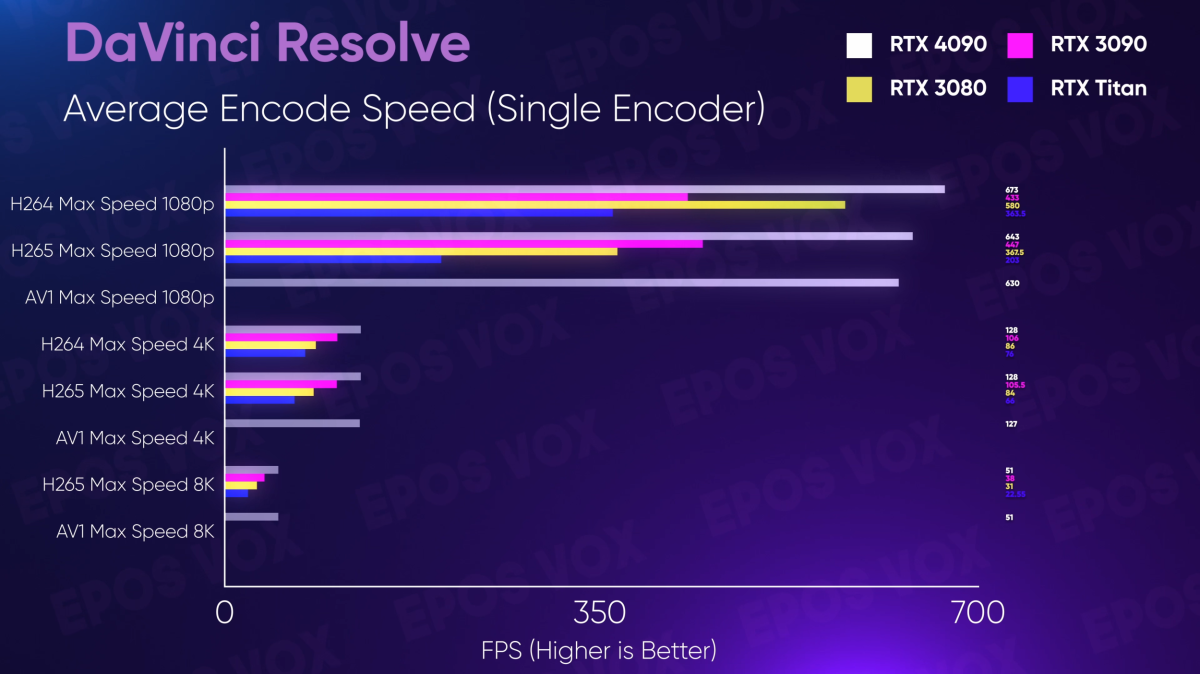

Blackmagic DaVinci Resolve, however, saw significant performance improvements across the board over both the RTX 3090 and Titan RTX. This makes sense, as Resolve is far more optimized for GPU workflows than Premiere Pro. Renders were much faster thanks to both the higher 3D compute on effects and the faster encoding hardware onboard, and general playback and workflow felt much snappier and more responsive.

I’ve been editing with the GeForce RTX 4090 for a few weeks now, and the experience has been great, though I had to revert to the public release of Resolve, so I haven’t been able to export using the AV1 encoder for most of my videos.

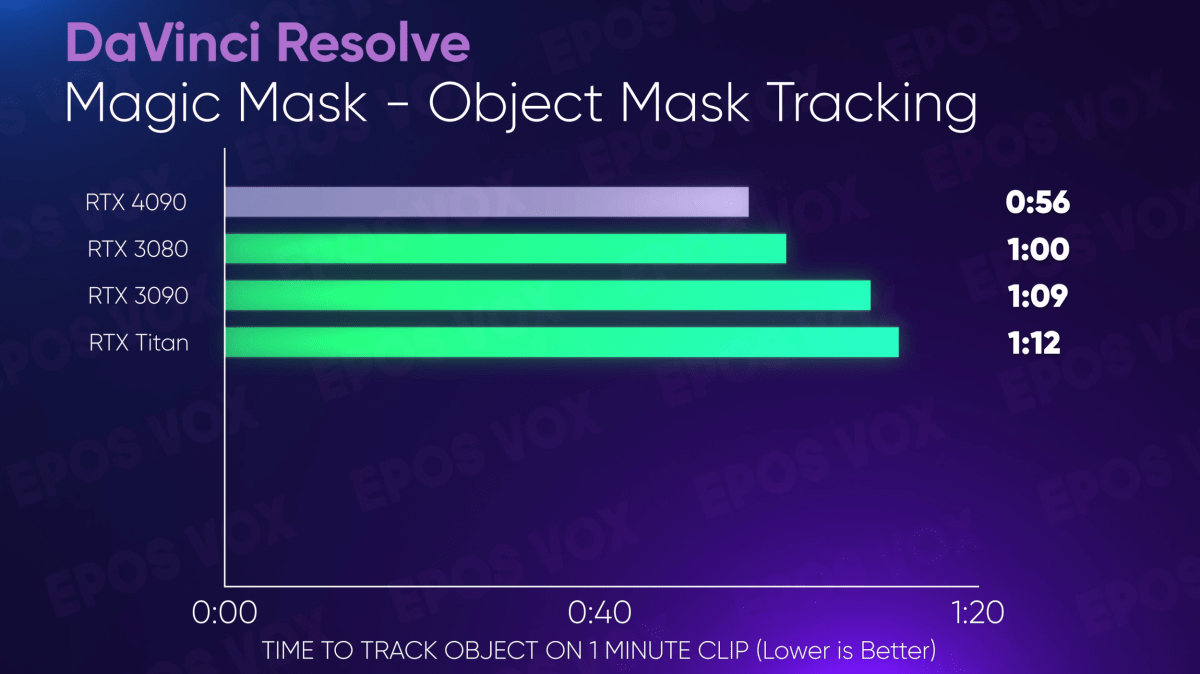

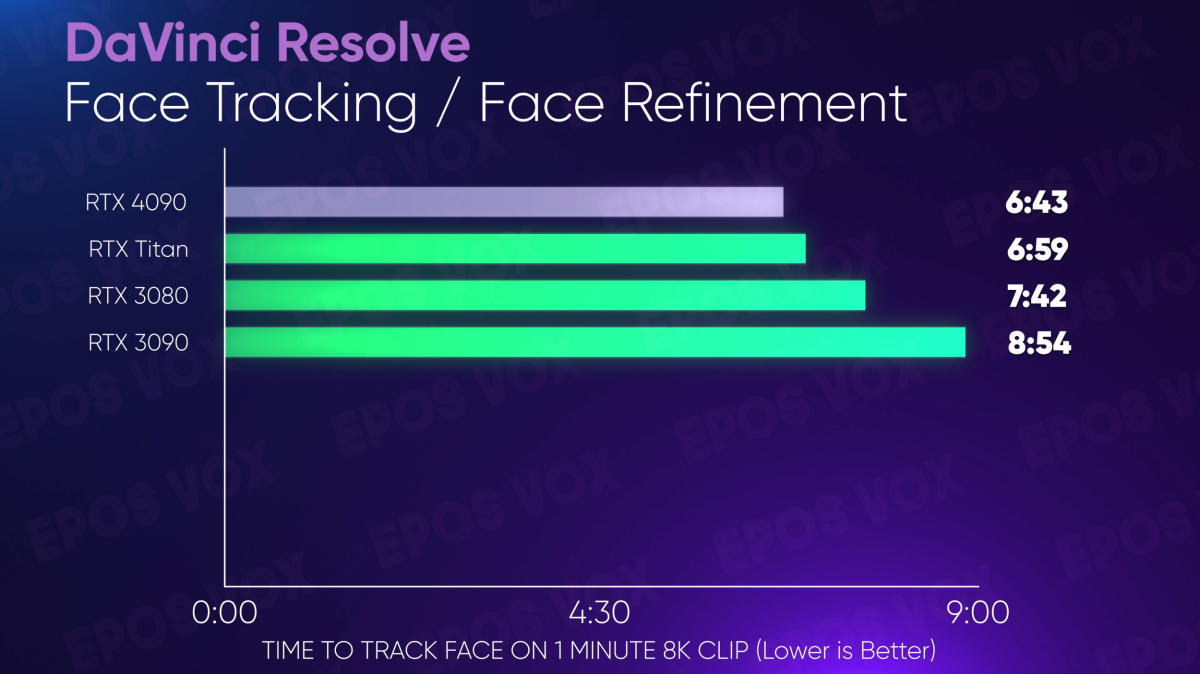

I also wanted to see if the AI hardware improvements would benefit Resolve’s Magic Mask tool for rotoscoping, or its face tracker for the Face Refinement plugin. Admittedly, I was hoping to see more improvement from the RTX 4090 here, but there is an improvement, which saves me time and is a win. These tasks are tedious and slow, so any minutes I can shave off make my life easier. Perhaps in time more optimization can be done for the new architecture changes in Lovelace (the codename of the RTX 40-series’ underlying GPU architecture).

The performance in my original Resolve benchmarks impressed me enough that I decided to build a second-tier test using my Threadripper Pro workstation: rendering and exporting an 8K video with 8K RAW source footage, lots of effects, Super Scale (Resolve’s internal “smart” upscaler) on 4K footage, and so on. This project is no joke. The normal high-tier gaming cards just errored out because their lower VRAM quantities couldn’t handle the project, which bumps the RTX 3060, 2080, and 3080 out of the running. But putting the 24GB VRAM monsters to the test, the RTX 4090 exported the project a whole 8 minutes faster than the RTX 3090. Eight minutes. That kind of time scaling is game-changing for single-person workflows like mine.
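
To get a rough feel for why VRAM runs out so quickly at 8K, here is a back-of-the-envelope sketch. It assumes each working frame is held as RGBA with 32-bit floats per channel; that layout is my assumption for illustration, not a statement of how Resolve actually allocates memory, and `frame_bytes` and `frames_that_fit` are hypothetical helper names.

```python
def frame_bytes(width, height, channels=4, bytes_per_channel=4):
    """Size of one uncompressed frame (assumed RGBA, 32-bit float per channel)."""
    return width * height * channels * bytes_per_channel


def frames_that_fit(vram_gib, width, height):
    """How many such frames fit in a given amount of VRAM, ignoring all other usage."""
    return (vram_gib * 1024**3) // frame_bytes(width, height)


if __name__ == "__main__":
    size = frame_bytes(7680, 4320)
    print(f"One 8K frame: {size / 1024**2:.2f} MiB")  # 506.25 MiB
    for vram in (10, 24):
        print(f"{vram} GiB card: {frames_that_fit(vram, 7680, 4320)} frames")
```

Under these assumptions a single 8K frame is about half a gigabyte, so effect chains that keep several intermediate frames around can exhaust a smaller card well before a 24GB one.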

If you’re a high-res or effects-heavy video editor, the RTX 4090 is already going to save you hours of waiting and slower working right out of the gate, and we haven’t even talked about encoding speeds yet.

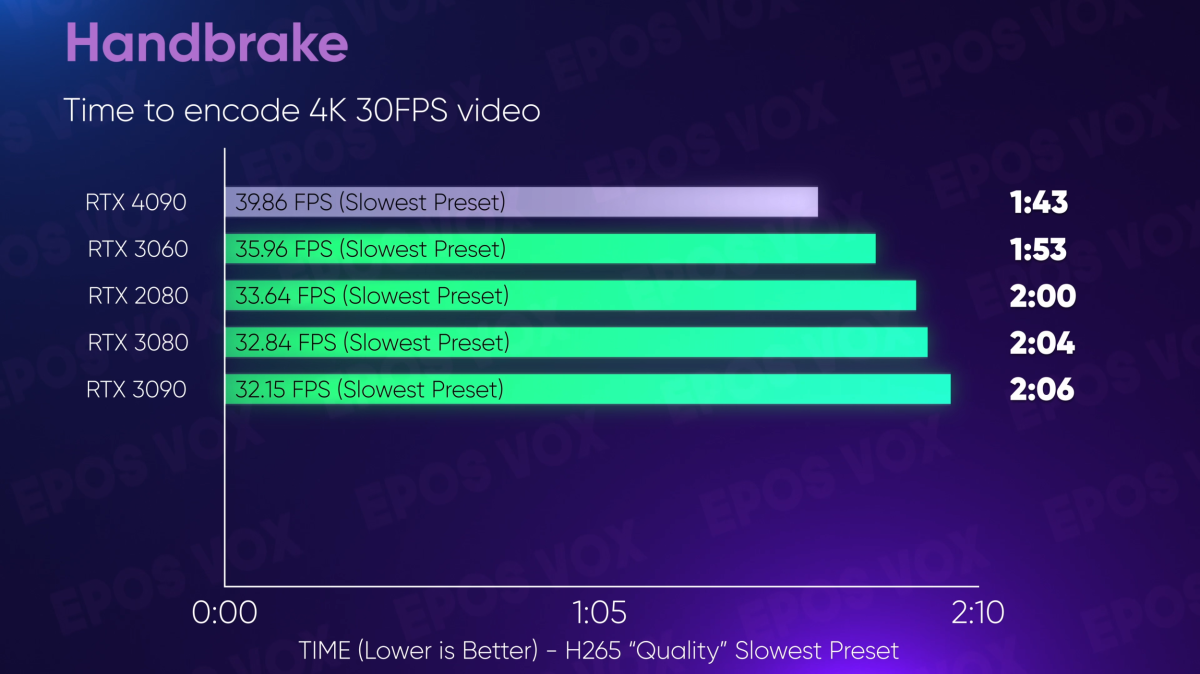

Video encoding

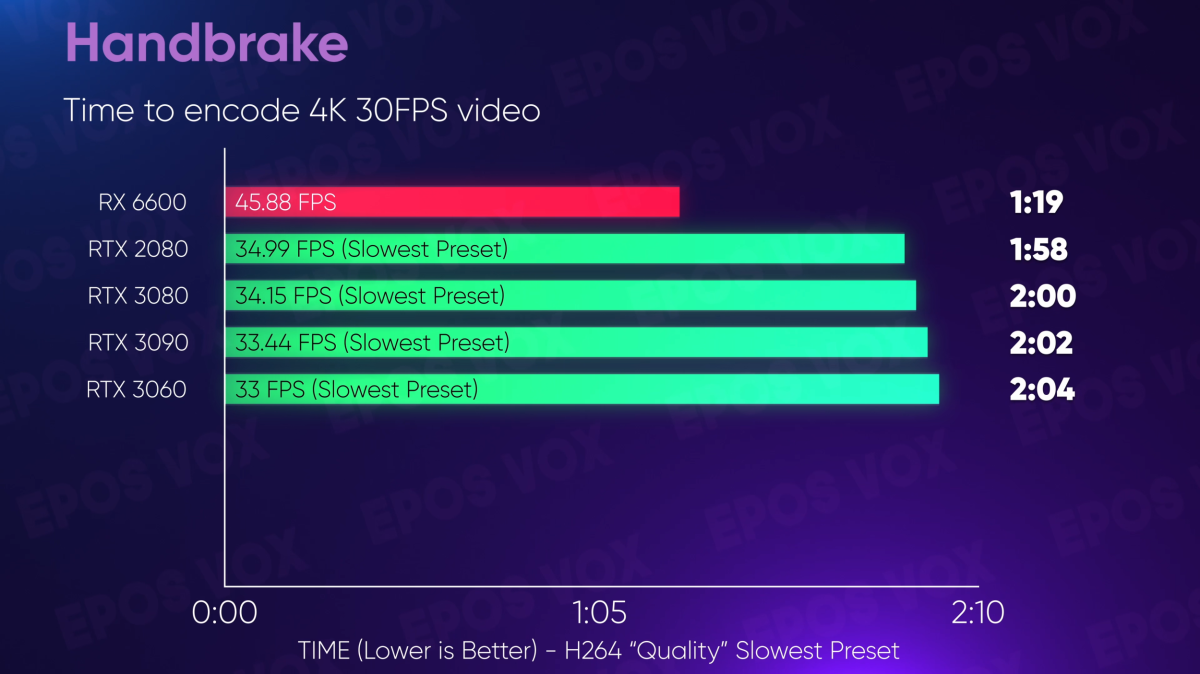

For simply transcoding video in H.264 and H.265, the GeForce RTX 4090 also just runs laps around previous Nvidia GPUs. H.265 is the only area where AMD outperforms Nvidia in encoder speed (though not necessarily quality), as AMD’s HEVC encoder has been blazing fast ever since the Radeon 5000 GPUs.

The new Ada Lovelace architecture also comes with new dual encoder chips. Individually they already run a fair bit faster for H.264 and H.265 encoding than Ampere and Turing, and they also encode AV1, the new, open-source video codec from the Alliance for Open Media.

AV1 is the future of web-streamed video, with most major companies involved in media streaming also being members of the consortium. The goal is to create a highly efficient (as in, better quality per bit) video codec that can meet the needs of the modern high-resolution, high-frame-rate, and HDR streaming world, while avoiding the high licensing and patent costs associated with the H.265 (HEVC) and H.266 (VVC) codecs. Intel was first to market with hardware AV1 encoders in its Arc GPUs, as I covered for PCWorld; now Nvidia brings AV1 encoding to its GPUs.

I cannot get completely accurate quality comparisons between Intel’s and Nvidia’s AV1 encoders yet due to limited software support. From the basic tests I could do, Nvidia’s AV1 encodes are on par with Intel’s, but I have since found out that the encoder implementations, even in the software where I can use both, could use some fine-tuning to best represent both sides.

Performance-wise, AV1 encodes about as fast as H.265/HEVC on the RTX 4090. Which is fine. But the new dual encoder chips allow both H.265 and AV1 to be used to encode 8K60 video, or just faster 4K60 video. They do this by splitting each video frame into horizontal halves, encoding the halves on the separate chips, and then stitching them back together before finalizing the stream. This sounds like how Intel’s Hyper Encode was supposed to work (Hyper Encode instead splits GOPs, or Groups of Pictures, between the iGPU and the Arc dGPU), but in all of my tests I found that Hyper Encode only slowed the process down rather than speeding it up. (Plus it didn’t work with AV1.)
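
The split-encode-stitch idea can be sketched in a few lines of Python. This is only a conceptual illustration: `fake_encode` is a hypothetical stand-in that copies pixel rows rather than compressing them, the two worker threads merely play the role of the two encoder chips, and splitting into a top and a bottom half is my reading of "horizontal halves". The real work happens in fixed-function hardware behind Nvidia's encoder SDK.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_encode(rows):
    """Hypothetical stand-in for one hardware encoder: just copies the rows."""
    return [list(row) for row in rows]


def split_frame_encode(frame):
    """Split a frame (a list of pixel rows) in two, 'encode' each half on
    its own worker, then stitch the halves back together in order."""
    mid = len(frame) // 2
    halves = [frame[:mid], frame[mid:]]  # assumed top/bottom split
    with ThreadPoolExecutor(max_workers=2) as pool:
        # pool.map preserves input order, so the halves stitch back correctly
        encoded = list(pool.map(fake_encode, halves))
    return encoded[0] + encoded[1]


if __name__ == "__main__":
    frame = [[row * 10 + col for col in range(4)] for row in range(6)]
    assert split_frame_encode(frame) == frame
    print("stitched frame matches the original")
```

The point of the design is that each worker only ever touches half the pixels of any frame, which is what lets two encoders share a single 8K60 stream.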

Streaming

As a result of the aforementioned improvements in encoder speed, streaming and recording your screen, camera, or gameplay is a far, far better experience. This comes with an update to the NVENC encoder SDK within OBS Studio, which now presents users with seven presets (akin to x264’s “CPU Usage Presets”) scaling from P1, the fastest and lowest quality, to P7, the slowest and best quality. In my testing, P6 and P7 produced basically the same result on RTX 2000, 3000, and 4000 GPUs, and competed with x264 VerySlow in quality.

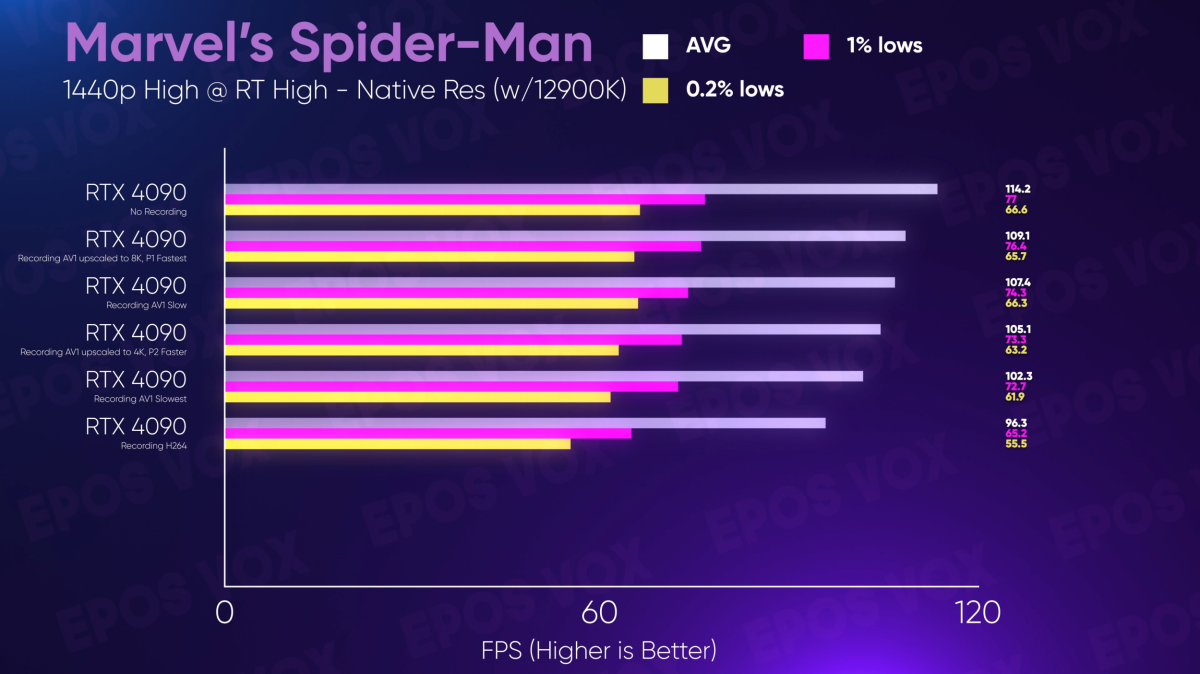

While game streaming, I saw mostly the same performance when recording with H.264 as on other GPUs in Spider-Man Remastered (though other games will see more benefits), but encoding with AV1… had a negligible impact on game performance. It was virtually transparent. You wouldn’t even know you were recording, even on the highest quality preset. I even had enough headroom to set OBS to an 8K canvas, upscale my 1440p game capture to 8K within OBS, and record using the dual encoder chips, and I still did not see a significant impact.

Unfortunately, while Nvidia’s ShadowPlay feature does get 8K60 support on Lovelace via the dual encoders, only HEVC is supported at this time. Hopefully AV1 can be implemented soon, and supported at all resolutions, since HEVC currently only works for 8K or HDR.

I also found that the GeForce RTX 4090 is now fast enough to do completely lossless 4:4:4 HEVC recording at 4K 60FPS, something prior generations simply cannot do. 4:4:4 chroma sampling (full color resolution, with no subsampling) is important for maintaining text clarity and for keeping the image intact when zooming in on small elements like I do for videos. At 4K it’s kind of been a “white whale” of mine, as either the throughput on RTX 2000/3000 hasn’t been enough or the OBS implementation of 4:4:4 isn’t optimized enough. Unfortunately, 4:4:4 isn’t possible at all in AV1 on these cards.
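
For a sense of why 4:4:4 at 4K 60FPS is so demanding, the sketch below compares the uncompressed data the encoder has to ingest per second for each chroma format. The 3840x2160 resolution and 8-bit depth are my assumptions for the example, and `raw_rate_mb_per_s` is a hypothetical helper name.

```python
# Average samples per pixel: one luma sample plus two chroma samples,
# with chroma stored at full, half, or quarter resolution.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def raw_rate_mb_per_s(width, height, fps, chroma, bit_depth=8):
    """Uncompressed video data rate in megabytes (10^6 bytes) per second."""
    bytes_per_frame = width * height * SAMPLES_PER_PIXEL[chroma] * bit_depth / 8
    return bytes_per_frame * fps / 1e6


if __name__ == "__main__":
    for chroma in ("4:2:0", "4:4:4"):
        rate = raw_rate_mb_per_s(3840, 2160, 60, chroma)
        print(f"{chroma}: {rate:.1f} MB/s")
    # 4:2:0: 746.5 MB/s
    # 4:4:4: 1493.0 MB/s
```

In other words, 4:4:4 feeds the encoder twice as many samples as the usual 4:2:0 at the same resolution and frame rate, before compression even starts.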

Photo editing

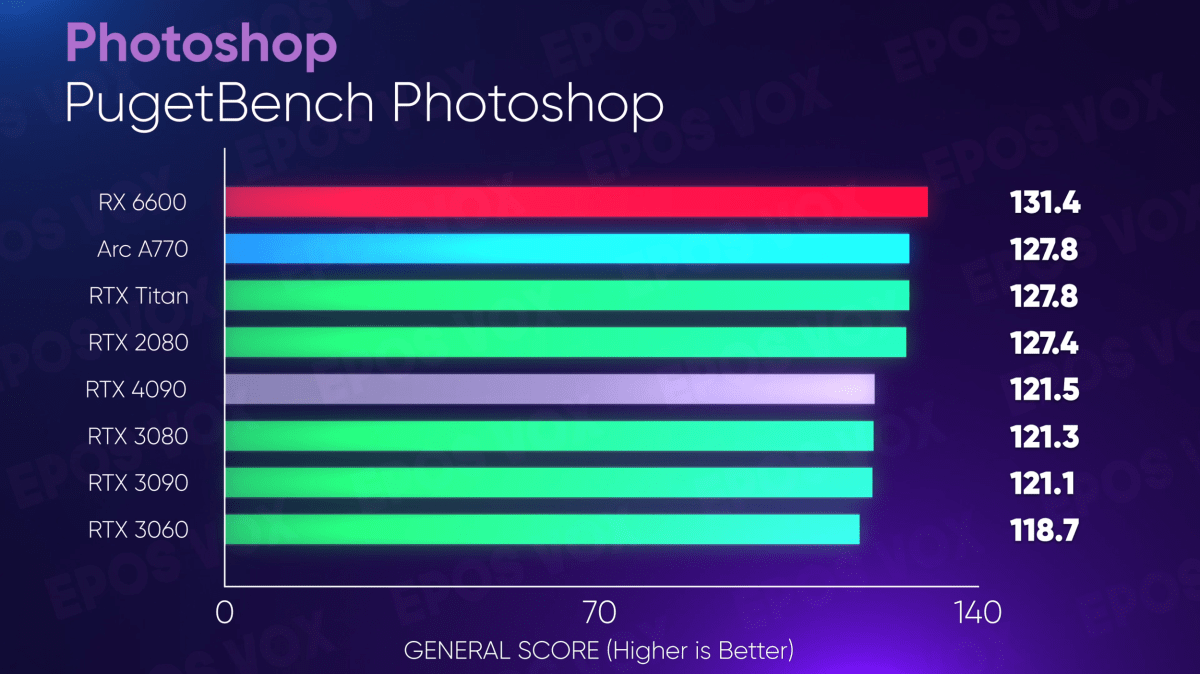

Photo editing sees virtually zero improvement on the GeForce RTX 4090. There’s a slight score increase on the Adobe Photoshop PugetBench tests versus previous generations, but nothing worth buying a new card over.

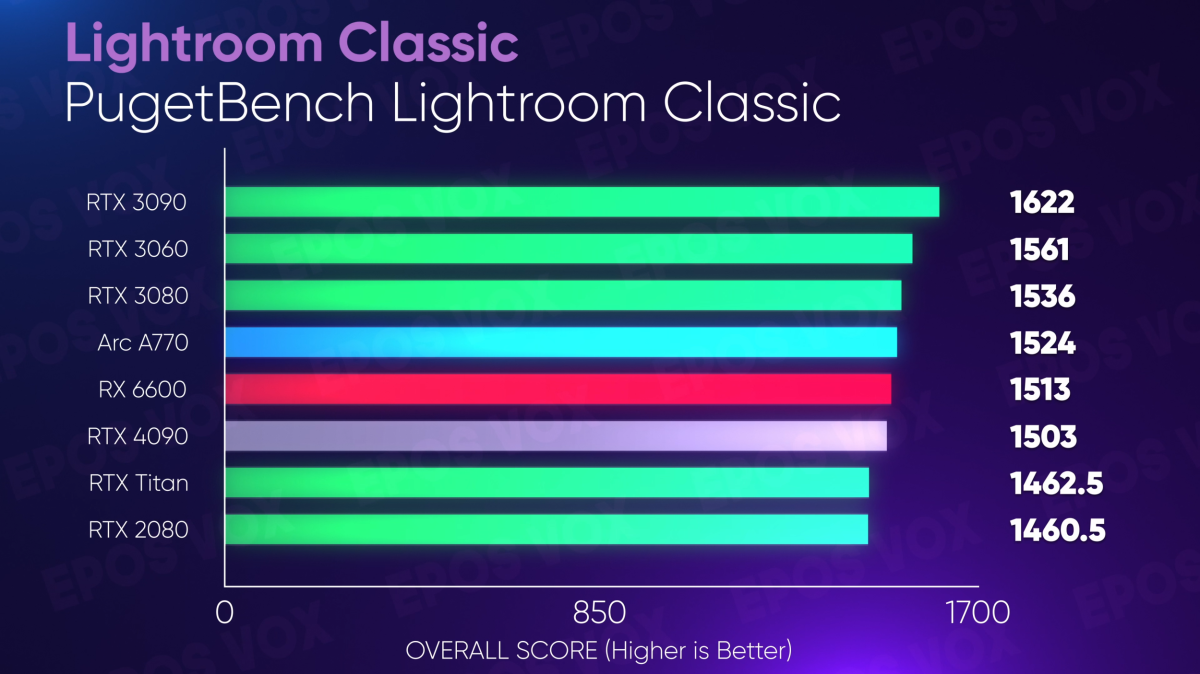

Same goes for Lightroom Classic. Shame.

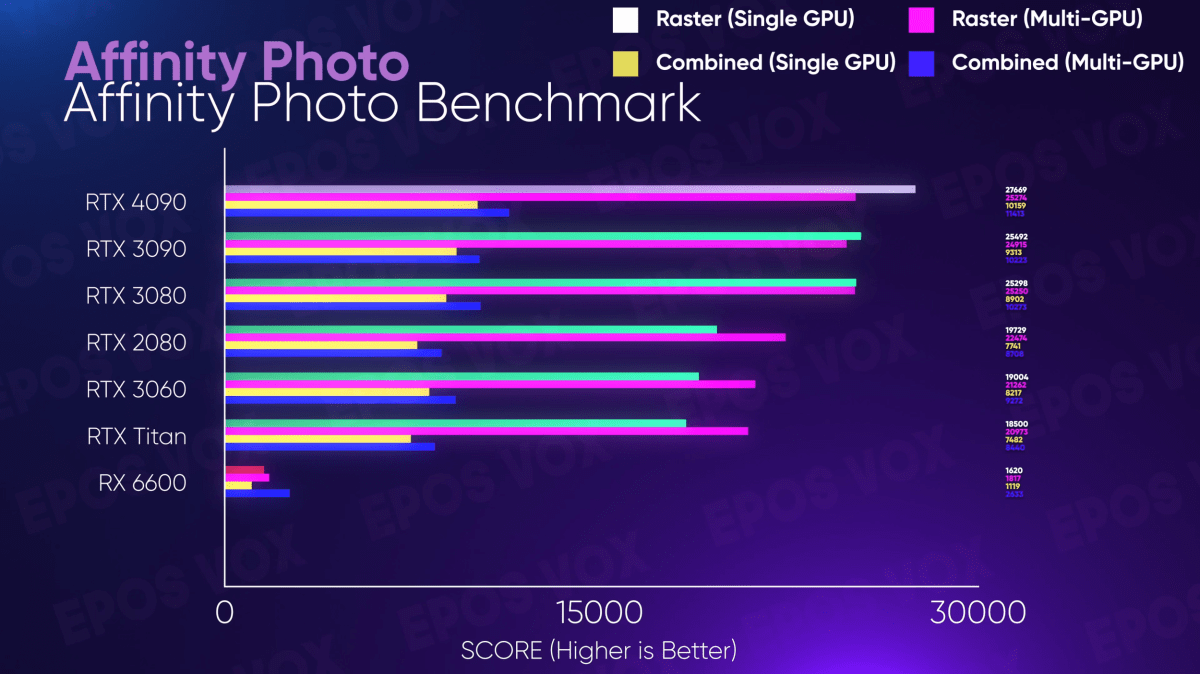

But if you’re an Affinity Photo user, the RTX 4090 far outperforms other GPUs. I’m not sure whether that means Affinity is more or less optimized in this case.

A.I.

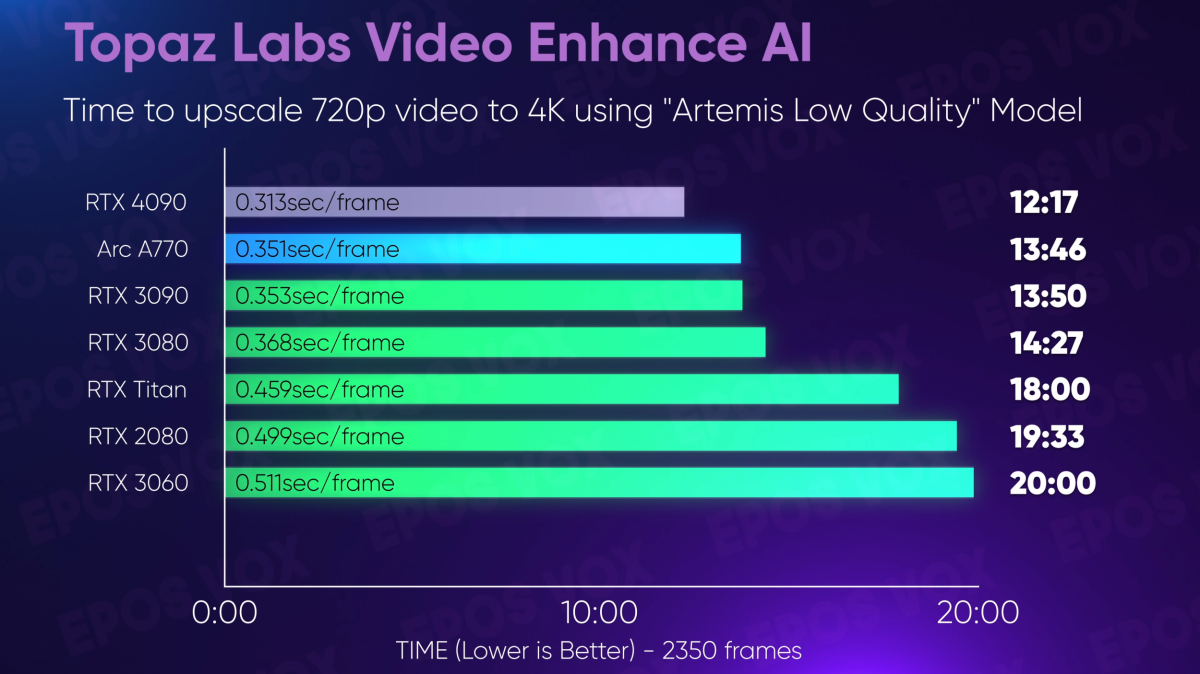

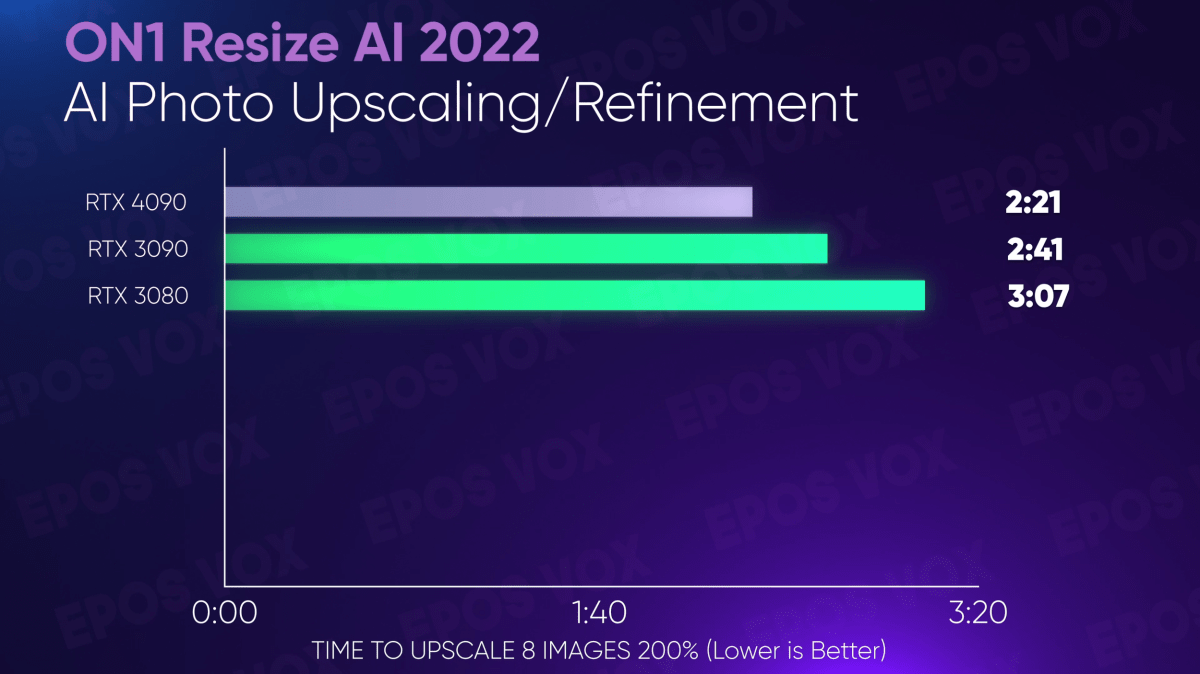

AI is all the rage these days, and AI upscalers are in high demand right now. Theoretically, the GeForce RTX 4090’s improved AI hardware would benefit these workflows—and we mostly see this ring true. The RTX 4090 tops the charts for fastest upscaling in Topaz Labs Video Enhance AI and Gigapixel, as well as ON1 Resize AI 2022.

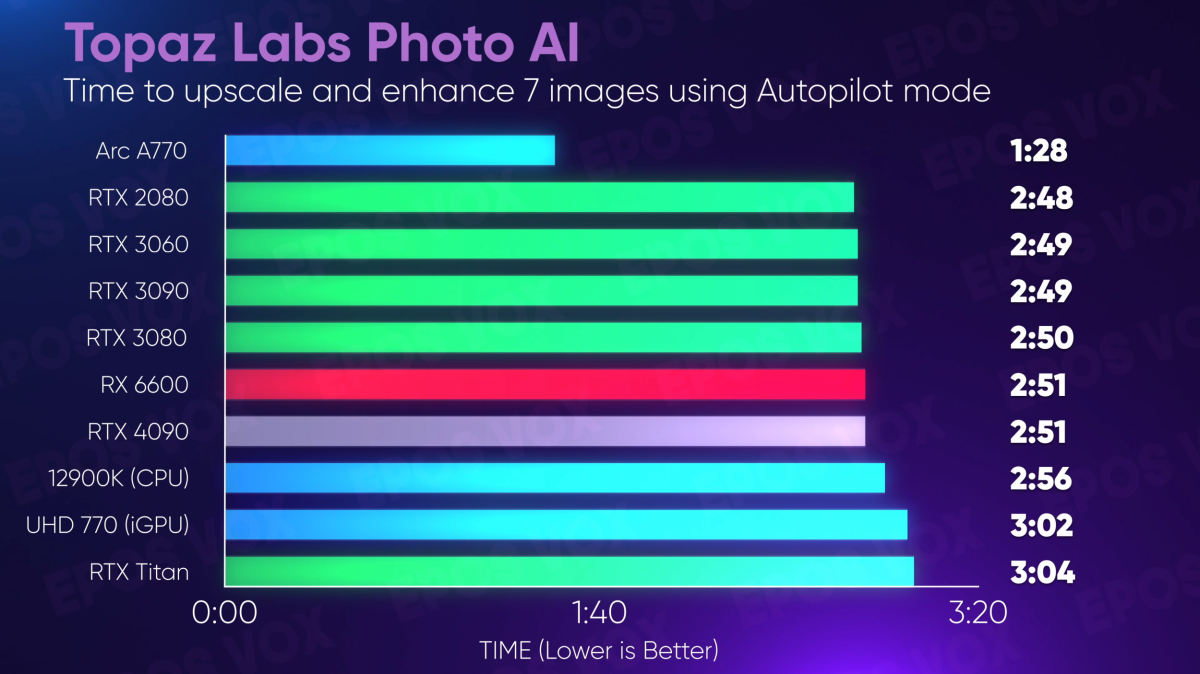

But Topaz’s new PhotoAI app sees weirdly low performance on all Nvidia cards. I’ve been told this may be a bug, but a fix has yet to be distributed.

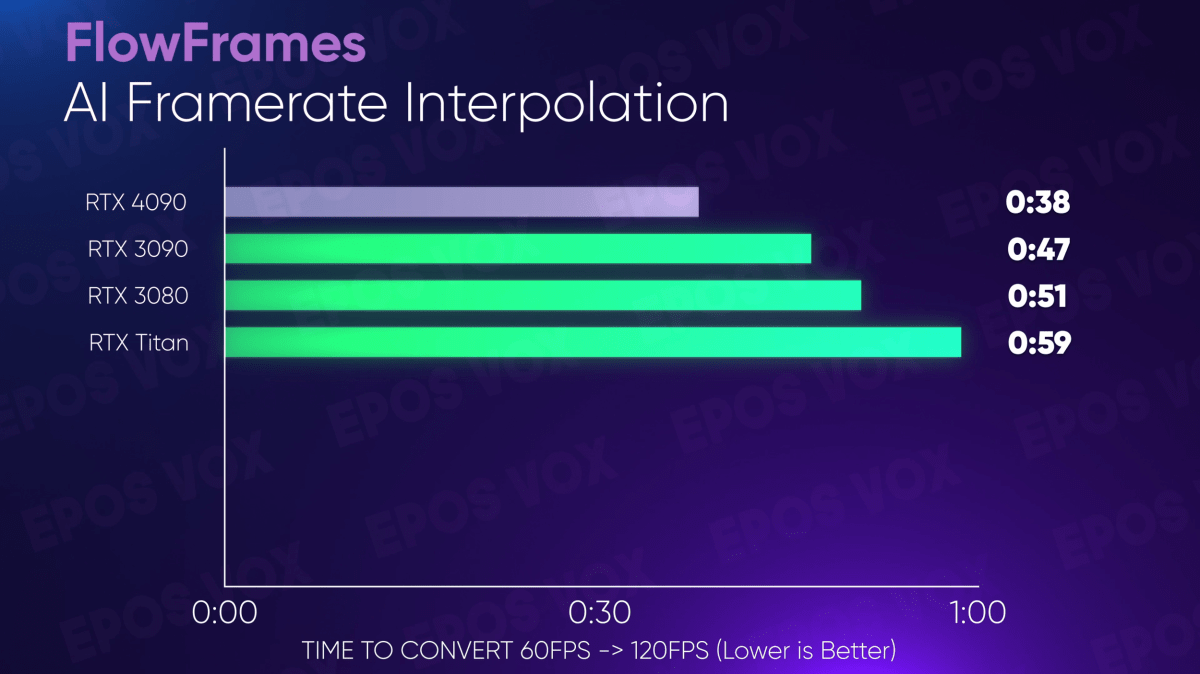

Using FlowFrames to AI-interpolate 60FPS footage to 120FPS for slow-mo usage, the RTX 4090 sees a 20 percent speed-up compared to the RTX 3090. This is nice as it is, but users in the FlowFrames Discord server have told me that this could theoretically scale further as optimizations for Lovelace are developed.

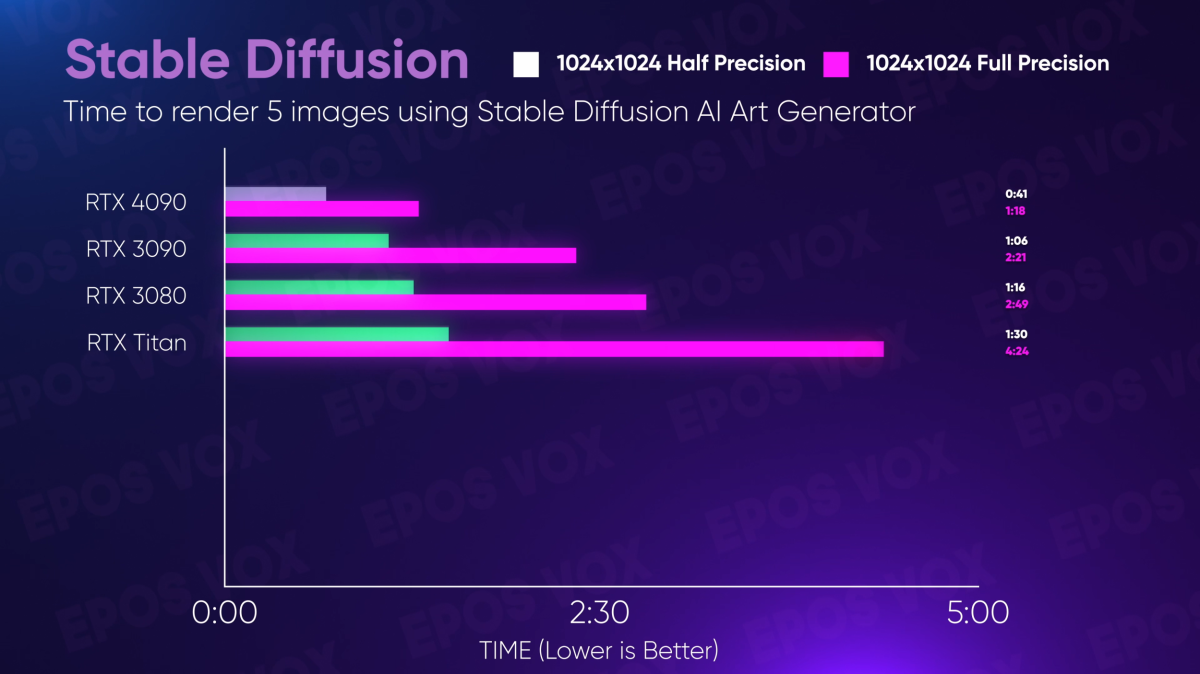

What about generating AI art? I tested N00mkrad’s Stable Diffusion GUI and found that the GeForce RTX 4090 blew away all previous GPUs in both half- and full-precision generation, and once again I have been told the results “should be” even higher. Exciting times.

3D rendering

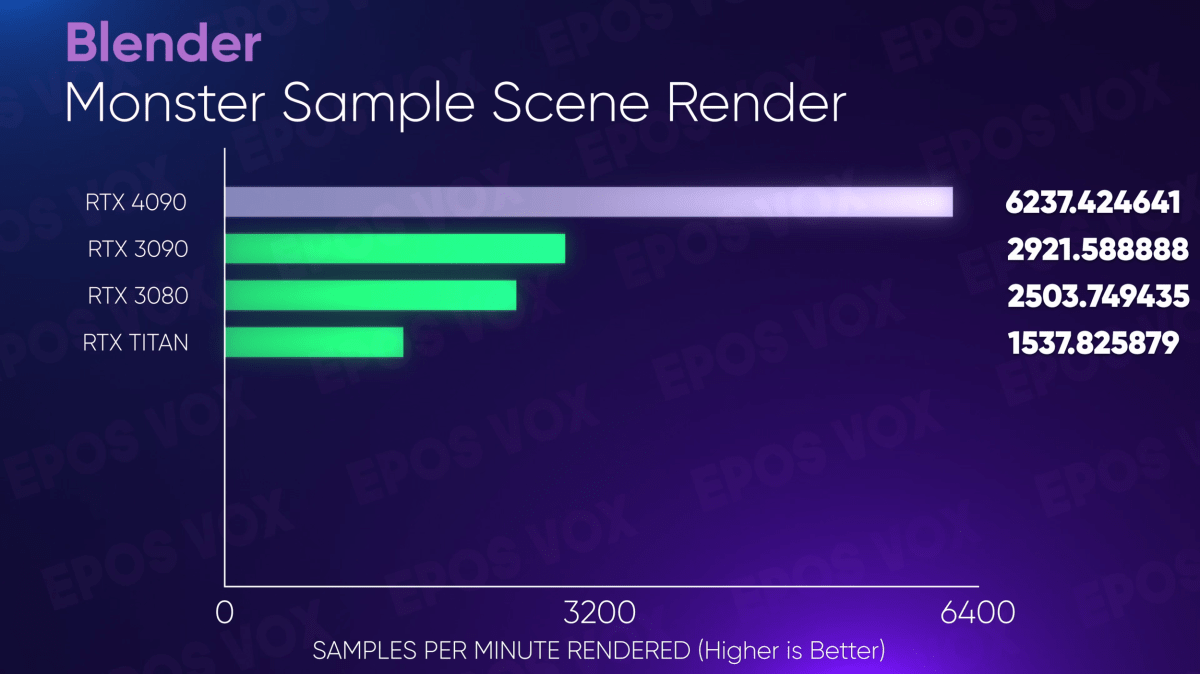

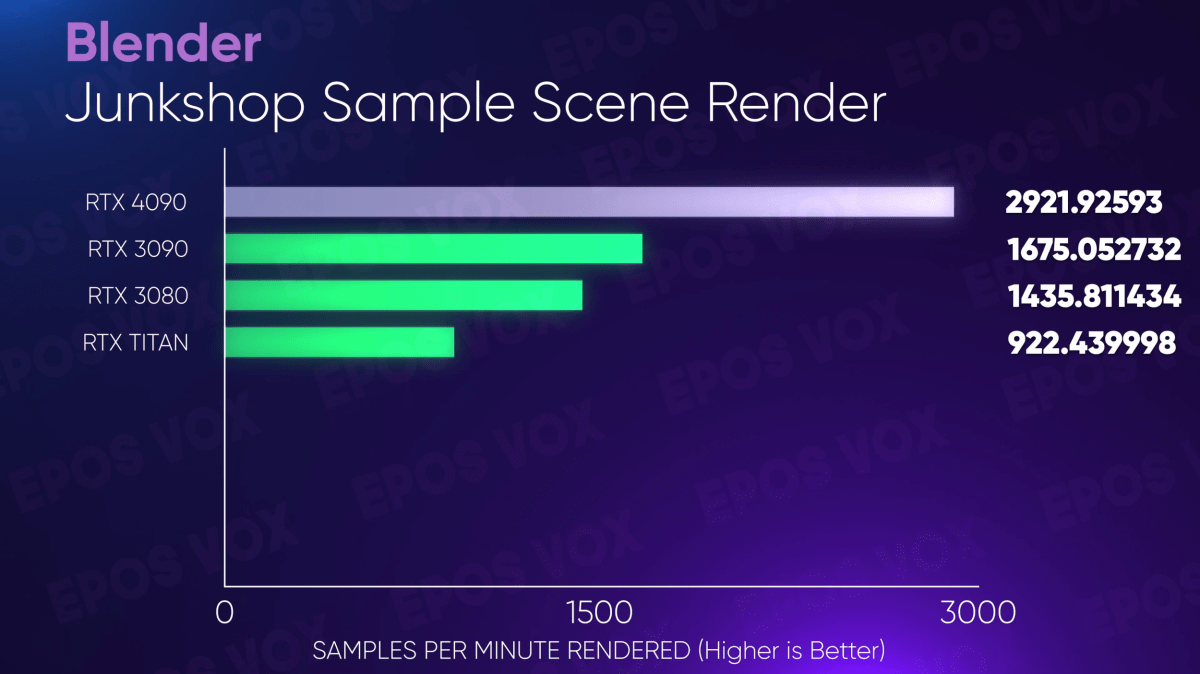

Alright, the bold “2X Faster” claims are here. I wanted to test 3D workflows on my Threadripper Pro rig, since I’ve been getting more and more into these new tools in 2022.

Testing Blender, both the Monster and Classroom benchmark scenes have the RTX 4090 rendering twice as fast as the RTX 3090, with the Junkshop scene rendering just shy of 2X faster.

This translates not only to faster final renders—which at scale is absolutely massive—but a much smoother creative process as all of the actual preview/viewport work will be more fluid and responsive, too, and you can more easily preview the final results without waiting forever.

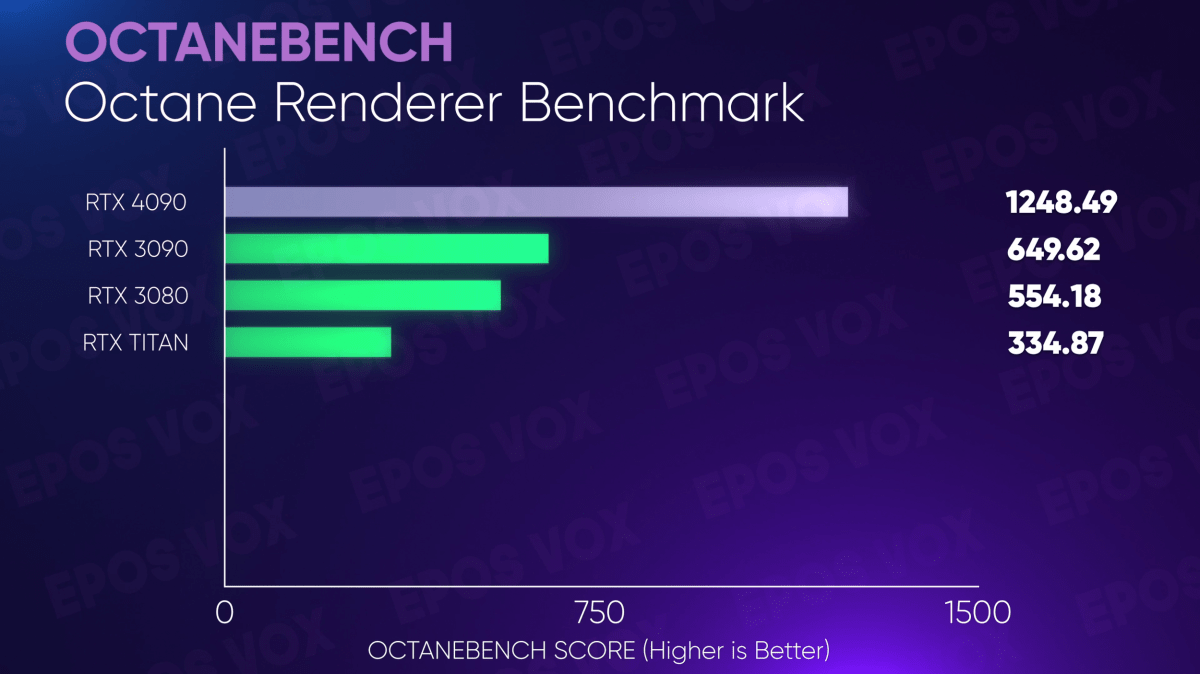

Benchmarking Octane—a renderer used by 3D artists and VFX creators in Cinema4D, Blender, and Unity—again has the RTX 4090 running twice as fast as the RTX 3090.

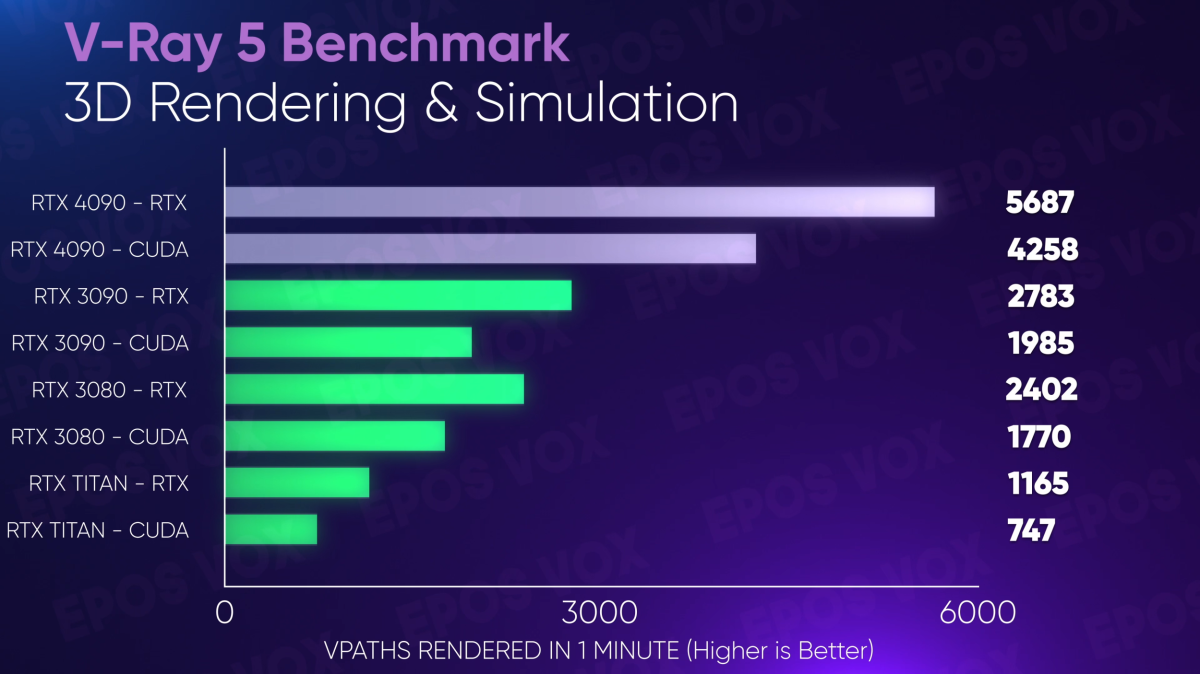

…and again, the same goes for V-Ray in both CUDA and RTX workflows.

Bottom line: The GeForce RTX 4090 offers outstanding value to content creators

That’s where the value is. The Titan RTX was $2,500, and it was already phenomenal to get that performance for $1,500 with the RTX 3090. Now for $100 more, the GeForce RTX 4090 runs laps around prior GPUs in ways that have truly game-changing impacts on workflows for creators of all kinds.
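
As a rough illustration of that value argument, here is the performance-per-dollar arithmetic using the launch prices quoted above. The relative performance figures are a simplification: they take the RTX 3090 as a 1.0 baseline and the RTX 4090 at 2.0, which only holds for the workloads where the 2X result actually showed up (the 3D rendering tests), and `perf_per_dollar` is a hypothetical helper name.

```python
def perf_per_dollar(relative_perf, price_usd):
    """Relative performance delivered per dollar of list price."""
    return relative_perf / price_usd


if __name__ == "__main__":
    rtx_3090 = perf_per_dollar(1.0, 1500)  # baseline card at $1,500
    rtx_4090 = perf_per_dollar(2.0, 1600)  # assumed 2X performance at $1,600
    value_gain = rtx_4090 / rtx_3090
    print(f"RTX 4090 vs RTX 3090 performance per dollar: {value_gain:.3f}x")  # 1.875x
```

Under that assumption the RTX 4090 delivers close to 1.9 times the rendering performance per dollar; in workloads like Premiere Pro or Photoshop, where the gain was minimal, the same arithmetic comes out slightly below the RTX 3090.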

This might explain why the formerly-known-as-Quadro line of cards got far less emphasis over the past few years, too. Why buy a $5,000+ graphics card when you can get the same performance (or more; Quadros were never super fast, they just had lots of VRAM) for $1,600?

Obviously the pricing of the $1,200 RTX 4080 and the recently un-launched $899 4080 12GB can still be concerning until we see independent testing numbers, but the GeForce RTX 4090 might just be the first product where marketing has boasted of “2x faster” performance and I feel like I’ve actually received what was promised. Especially for results within my niche work interests instead of mainstream tools or gaming? This is awesome.

Pure gamers probably shouldn’t spend $1,600 on a graphics card, unless feeding a high refresh rate 4K monitor with no compromises is a goal. But if you’re interested in getting real, nitty-gritty content creation work done fast, the GeForce RTX 4090 can’t be beat—and it’ll make you grin from ear to ear during any late-night Call of Duty sessions you hop into, as well.
