Runway Gen-2 supercharges AI video generation — and I tried it

Apps like Dall-E 2, Stable Diffusion, and Midjourney allowed us to convert a text description into a detailed image. Then ChatGPT let us spin a story from a prompt. Now, Runway Gen-2 is combining both aspects — creating moving images to tell a story.

But they are very short stories, limited to four seconds. And the videos often produce freaky, surrealist effects that were not called for in the prompt: a ball of yarn with a wagging tail, a cat whose body melts away, a horse with a bicycle wheel.

Yes, these are the early days of generative AI video, but things are progressing fast. Runway Gen-1 could only apply AI effects to an existing video, whether shot traditionally or generated elsewhere. Gen-2 creates a video from mere conjuring, and with greater detail and style than rival apps such as Gencraft, ModelScope Text to Video Synthesis, and Vercel.

Still, at this point, Runway is essentially a tech preview or proof of concept — far from a fully-formed product that can produce professional-grade results. And you can try it out for free, with an allotment of credits for 25 seconds of video before you have to start paying. (Check the end of the article for details of the convoluted pricing scheme.)

I tested Gen-2 against its rivals using a range of prompts. I started with the no-brainer of the internet, a cat, and progressed to more complex, detailed descriptions of the subjects, their movement, and the cinematic style. Gen-2 always had the finest detail and generally the most stylized shots, but also some of the weirdest effects.

Runway Gen-2 test results

The prompt “orange tabby cat” yielded several high-res clips with fine detail in the fur and whiskers and a dreamy look, with subtle, smooth movements in the cat’s face, trees swaying in the breeze in the background, and shifting light and shadow. The 16:9 widescreen dimensions and 1080p resolution lent additional cinematic effect. Rival apps yielded lower-resolution, square images with a more cartoony or sketched appearance, although Gencraft sometimes came close in its detail and attractive composition. (Unlike the other apps, which produce MP4 videos, Vercel generated GIFs, but common video-editing apps can turn them into proper video files.)

However, Runway Gen-2 was by far the most likely to go off the rails. In one case, it showed both a well-formed cat and a freakish tabby-colored blob. In another, the app rendered twin tabbies. And it gets weirder. For instance, I pushed the apps by ramping up to a very complex prompt: “orange tabby cat on a white carpet rolling a pink ball of yarn, warm lighting, realistic.” In one attempt, part of the cat’s body disappeared. In another, the cat was completely missing, and a human hand emerged from the ball of yarn. Rival apps had no such hallucinations.

But every app benefitted from multiple attempts, since generative AI yields a different result every time you try. (I gave every program three shots on each test, and judged them on their best attempts. I also adjusted the apps to the highest quality settings for features such as framerate.)

Surrealism aside, Gen-2 consistently impressed with its ambition. After the cat, I tried another generative AI meme: “astronaut riding a horse.” The app produced a stunning scene, with ominous dark clouds swirling over a star-filled background and the horse majestically swerving towards the camera. The astronaut wore a trim, slick spacesuit reminiscent of SpaceX garb, but he had on a traditional, open-face jockey helmet that wouldn’t serve him well in hard vacuum. (Two other attempts had sealed helmets.) Videos from Gencraft, ModelScope, and Vercel were far more cartoony.

Of course, we also had to test the prompt “will smith eating spaghetti,” which blew up the memeverse in March, when the free ModelScope app showed the power of generative AI video. None of these apps have any sense of table manners, though, as they all show the Fresh Prince stuffing his mouth without utensils. (I cleaned things up by adding “with a fork” to a subsequent prompt.) ModelScope birthed this meme, but it had a rocky go of it this time. The first two attempts showed only a bowl of spaghetti, without Mr. Smith. He appeared on the third try in relatively good detail. But Runway was once again far ahead in its detailed, stylized imagery, although the character didn’t always look quite like Will Smith.

Other apps produced a more animated diner, however, sometimes chomping away like it’s his first meal in weeks, but they all showed a lot of distortions in his face.

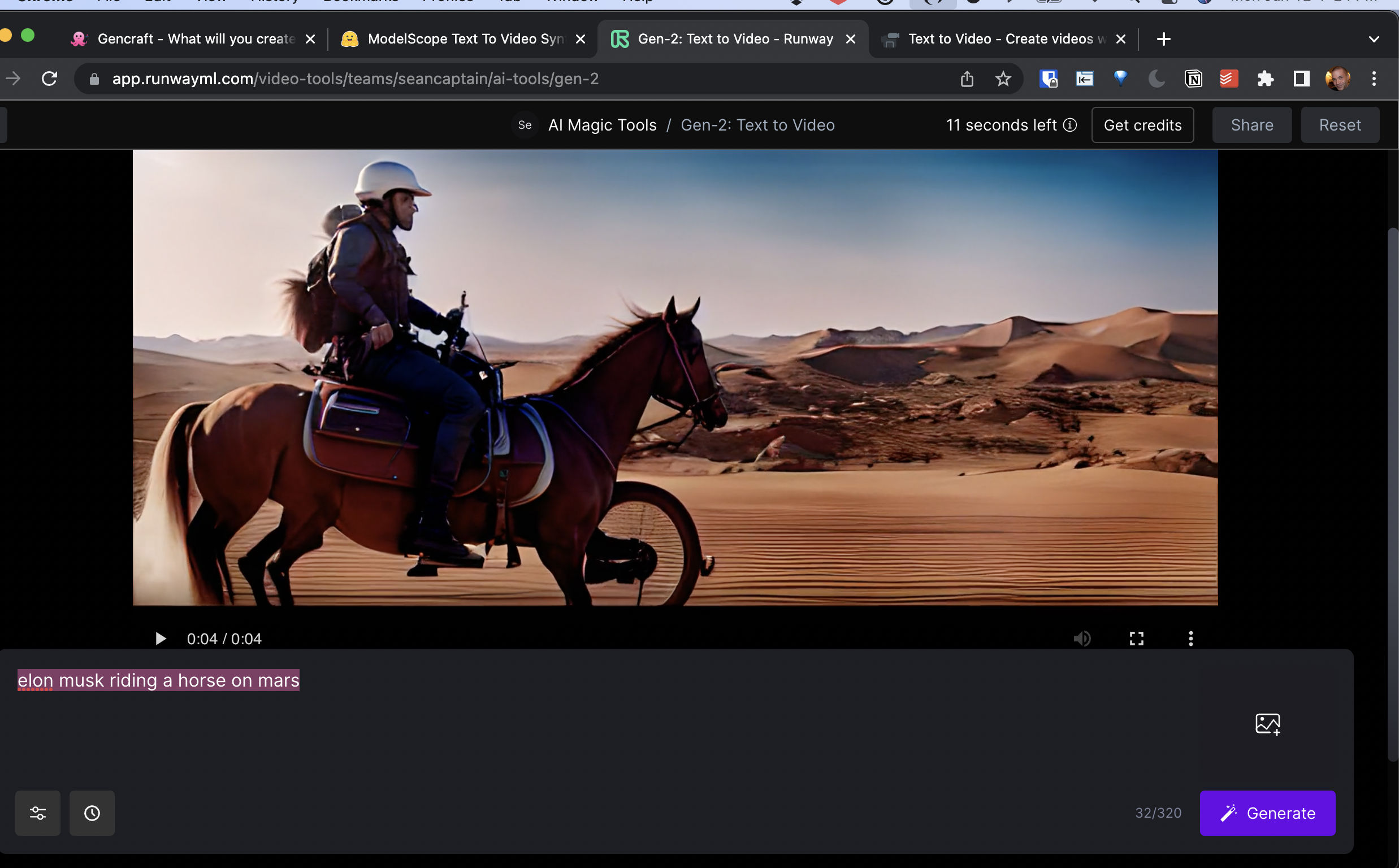

In fact, videos from rivals were almost always far more animated than Runway’s creations, which were more atmospheric than action-packed. The “elon musk riding a horse on mars” prompt was an extreme in stillness, showing what appeared to be a cut-out of the billionaire equestrian (looking nothing like Musk) sliding across a barely moving background, like some high-res South Park clip. Multiple tries did little to help, although one that added an unprompted bicycle wheel to the front of the horse was entertaining.

There’s another kind of action you won’t see on Runway: porn. Checking Vercel’s gallery of recent user creations one day, I saw a string of naked women generated by truly foul-mouthed prompts (although their actions weren’t nearly as explicit as what the users requested). The free ModelScope app also has no apparent censors. But Runway does. I tried the least-offensive prompt from the Vercel gallery, “cute naked girl,” on Gen-2. It informed me the request seemed to violate the terms of service.

Furthermore, “Repeated violations may lead to suspension of your account.” But the app didn’t charge me for my puerile request.

AI is still inconsistent — and pricey

In other cases, though, you can spend a lot. One clear lesson from all these apps is that today’s AI video is still quite inconsistent. Some pieces come out great on the first try; others fail again and again. Still-image generators like Midjourney (and even Runway’s own image-gen offering) present lower-res previews that you can choose from before paying to download one. With Runway, you have to pay for the flops, too, on your way to getting a better result.

How much does that cost? Gen-2’s pricing is based on a system of credits, with each second of video costing five. After using the introductory 125 free credits, you have to sign up for monthly plans, starting at $15 for 625 credits that expire at the end of the month. (The monthly fee drops to $12 if you sign up for a year.)

You can purchase additional, non-expiring credits starting at $10 for 1000. None of that’s too expensive when videos top out at 4 seconds: At five credits per second, each clip costs 20 credits, so you could create 50 of those for ten bucks. But as Runway evolves the ability to create longer movies, making them could get quite expensive at the current pricing.
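For anyone who wants to check the math, here is a rough sketch of the credit arithmetic in Python. It uses only the figures quoted above (five credits per second, four-second clips, and the listed prices); the function names and the plan labels are my own shorthand, not anything from Runway, and the sketch assumes every clip runs the full four seconds.

```python
# Figures quoted in the article; names below are illustrative, not Runway's.
CREDITS_PER_SECOND = 5
MAX_CLIP_SECONDS = 4

# (price in dollars, credits included) for each option mentioned above.
PLANS = {
    "free trial": (0.00, 125),
    "monthly": (15.00, 625),
    "monthly, billed annually": (12.00, 625),
    "top-up pack": (10.00, 1000),
}


def seconds_of_video(credits: int) -> float:
    """Seconds of video a credit balance buys."""
    return credits / CREDITS_PER_SECOND


def full_length_clips(credits: int, clip_seconds: int = MAX_CLIP_SECONDS) -> int:
    """Whole clips of the given length a credit balance buys."""
    return credits // (CREDITS_PER_SECOND * clip_seconds)


def dollars_per_second(price: float, credits: int) -> float:
    """Effective price of one second of generated video."""
    return price / seconds_of_video(credits)


if __name__ == "__main__":
    for name, (price, credits) in PLANS.items():
        print(
            f"{name}: {seconds_of_video(credits):.0f} s, "
            f"{full_length_clips(credits)} clips, "
            f"${dollars_per_second(price, credits):.3f} per second"
        )
```

Remember that flops count too: if only one attempt in three is usable, the effective price per keeper is roughly triple what the script prints.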

We’re probably still some distance from that day, though. For now, Runway Gen-2 provides just a tantalizing glimpse of what someday might grace our TV and cinema screens.

