How to try the Live Captions beta to enhance audio-to-text capability on Apple devices

Accessibility features are designed to improve an interface for people with a particular disability, but they can often improve the experience for all users. The new Live Captions feature, currently in beta testing on certain devices and in certain countries, is a prime example. Apple aims to turn all audio produced by your device into accurately transcribed, legible text, much like Live Text can extract text from bitmapped images.

To enable the feature, you must have an iPhone 11 or later with iOS 16 installed, a relatively recent iPad running iPadOS 16 (see this list), or an Apple silicon (M1 or M2) Mac with macOS Ventura installed. For iPhones and iPads, Apple says Live Captions works only when the device language is set to English (U.S.) or English (Canada). The macOS description more broadly says the beta is “not available in all languages, countries, or regions.”

To use Live Captions (or to check whether you can), go to Settings > Accessibility in iOS/iPadOS or System Settings > Accessibility in macOS Ventura. If you see a Live Captions (Beta) item, the feature is available on your device. Tap or click Live Captions to enable it. You can then tap Appearance in iOS/iPadOS or use the top-level menu items in macOS to modify how captions appear. You can separately enable or disable Live Captions in FaceTime to have captions appear in that app.

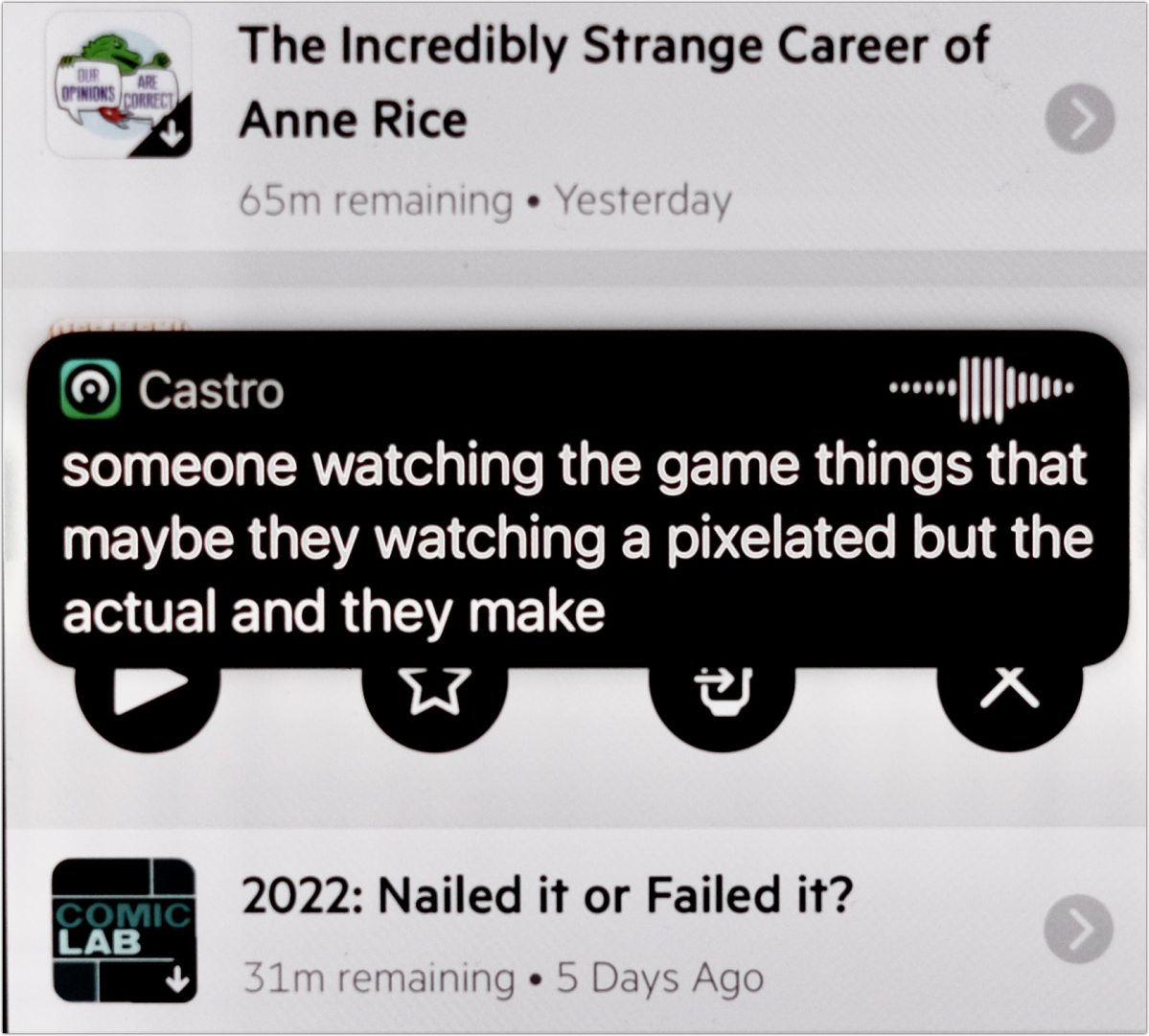

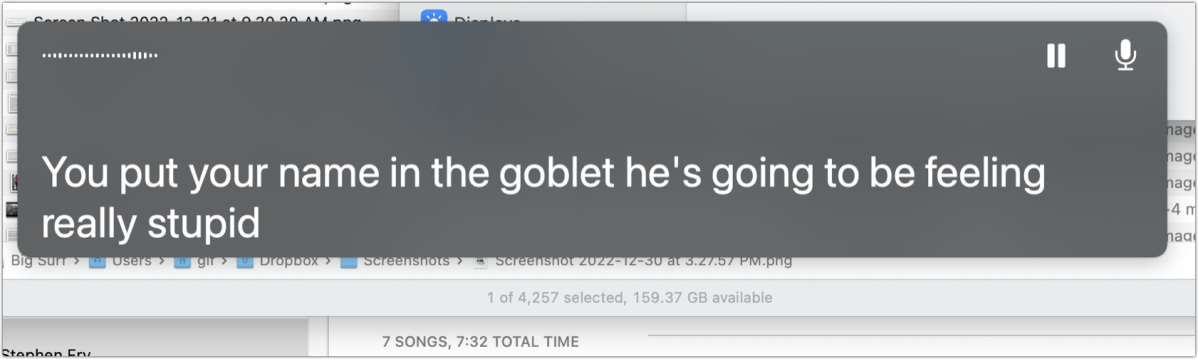

Live Captions appears as an overlay that shows its English-language interpretation of any audio produced by your system. A live audio waveform matches the sound Live Captions “hears.” In iOS and iPadOS, you can tap the overlay to access additional controls: minimize, pause, mic, and full screen. In macOS, the pause and mic buttons are available. If you tap or click the mic button, you can speak and have what you say appear onscreen. This could be handy if you’re trying to show someone the text of what you’re saying.

The text produced in Live Captions is ephemeral: you can’t copy or paste it. It’s also resistant to mobile screen captures: the overlay is apparently generated in such a way that iOS and iPadOS can’t capture it.

Live Captions shows a lot of promise, and it’s something to keep an eye on as it improves and expands. I tested Live Captions with podcasts, YouTube, and Instagram audio. It wasn’t as good as some AI-based transcription I’ve seen, such as in videoconferencing apps, but it made a valiant effort, and it was superior to not having captions.

Apple could tie Live Captions into its built-in translation feature. You might then be able to speak in your own language and show a translated version to someone in theirs, or get live transcriptions of video streams, podcasts, and other audio in a language you don’t speak.

This Mac 911 article is in response to a question submitted by Macworld reader Kevin.

Ask Mac 911

We’ve compiled a list of the questions we get asked most frequently, along with answers and links to columns: read our super FAQ to see if your question is covered. If not, we’re always looking for new problems to solve! Email yours to [email protected], including screen captures as appropriate and whether you want your full name used. Not every question will be answered, we don’t reply to email, and we cannot provide direct troubleshooting advice.
