City digital twins help train deep learning models to separate building facades

Game engines were originally developed to build imaginary worlds for entertainment. However, the same engines can also be used to build copies of real environments, known as digital twins. Researchers from Osaka University have found a way to use images generated automatically from a digital twin of a city to train deep learning models that can efficiently analyze images of real cities and accurately separate the buildings that appear in them.

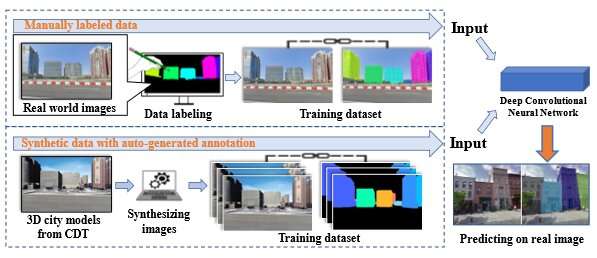

A convolutional neural network is a deep learning neural network designed for processing structured arrays of data such as images. Such advances in deep learning have fundamentally changed the way tasks like architectural segmentation are performed. However, an accurate deep convolutional neural network (DCNN) model needs a large volume of labeled training data, and labeling these data can be a slow and extremely expensive manual undertaking.

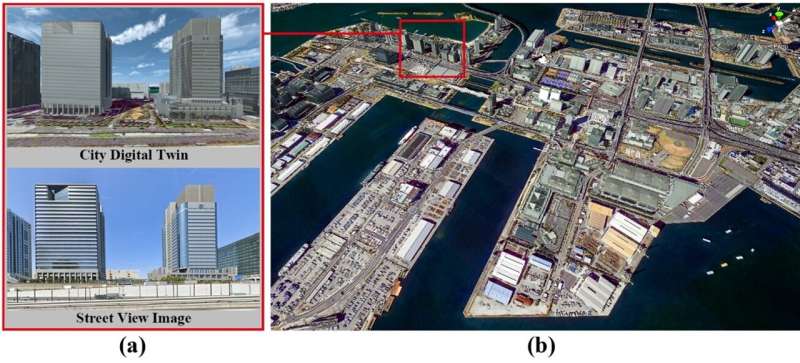

To create the synthetic digital city twin data, the investigators used a 3D city model from the PLATEAU platform, which contains 3D models of most Japanese cities at an extremely high level of detail. They loaded this model into the Unity game engine and mounted a camera setup on a virtual car, which drove around the city and captured synthetic images under various lighting and weather conditions. The Google Maps API was then used to obtain real street-level images of the same study area for the experiments.

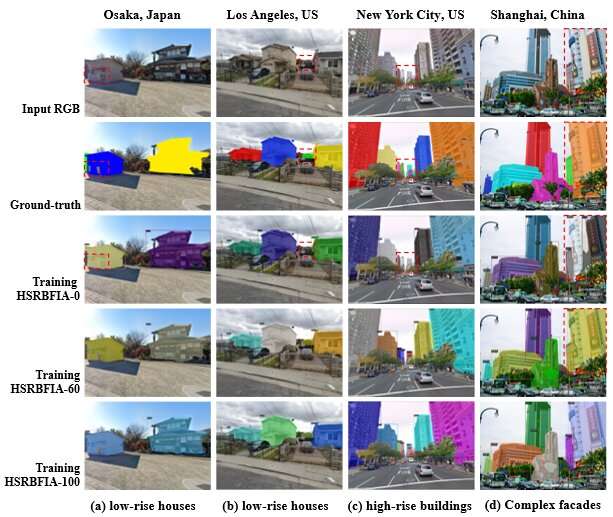

The researchers found that the digital city twin data leads to better results than purely virtual data with no real-world counterpart. Furthermore, adding synthetic data to a real dataset improves segmentation accuracy. Most importantly, the investigators found that when a certain fraction of real data is included in the digital city twin synthetic dataset, the segmentation accuracy of the DCNN is boosted significantly. In fact, its performance becomes competitive with that of a DCNN trained on 100% real data.
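As a rough illustration of what assembling such a hybrid training set could look like, the Python sketch below draws a fixed share of real images and fills the remainder with synthetic ones. It is not the researchers' code: the function name `build_hybrid_split`, the file names, the dataset sizes, and the 25% real fraction are all hypothetical, and the article does not state which fraction the study used or how its images were sampled.

```python
import random


def build_hybrid_split(synthetic, real, real_fraction, total, seed=0):
    """Return `total` training items, of which `real_fraction` are real images.

    All names and numbers here are illustrative assumptions, not values
    taken from the study.
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")

    n_real = round(total * real_fraction)
    n_synthetic = total - n_real
    if n_real > len(real) or n_synthetic > len(synthetic):
        raise ValueError("not enough images to satisfy the requested split")

    # A seeded generator keeps the split reproducible between runs.
    rng = random.Random(seed)
    chosen = rng.sample(real, n_real) + rng.sample(synthetic, n_synthetic)
    rng.shuffle(chosen)  # interleave real and synthetic items
    return chosen


# Hypothetical file lists standing in for the two image sources.
synthetic_images = [f"synthetic_{i:04d}.png" for i in range(1000)]
real_images = [f"real_{i:04d}.png" for i in range(200)]

train = build_hybrid_split(synthetic_images, real_images,
                           real_fraction=0.25, total=400)
```

Sweeping `real_fraction` from 0 to 1 and training one model per split would be one way to look for the point at which a mixed dataset becomes competitive with an all-real one.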

“These results reveal that our proposed synthetic dataset could potentially replace all the real images in the training set,” says Tomohiro Fukuda, the corresponding author of the paper.

Automatically separating out the individual building facades that appear in an image is useful for construction management and architectural design, large-scale measurements for retrofits and energy analysis, and even visualizing building facades that have been demolished. The system was tested on multiple cities, demonstrating the proposed framework’s transferability. The hybrid dataset of real and synthetic data yields promising prediction results for most modern architectural styles. This makes it a viable approach for training DCNNs for architectural segmentation tasks in the future, without the need for costly manual data annotation.

The study is published in the Journal of Computational Design and Engineering.


More information: Jiaxin Zhang et al., Automatic generation of synthetic datasets from a city digital twin for use in the instance segmentation of building facades, Journal of Computational Design and Engineering (2022). DOI: 10.1093/jcde/qwac086

Citation: City digital twins help train deep learning models to separate building facades (2022, September 8), retrieved 8 September 2022 from https://techxplore.com/news/2022-09-city-digital-twins-deep-facades.html

