A new report from Forrester is cautioning enterprises to be on the lookout for five deepfake scams that can wreak havoc. The five categories are fraud, stock price manipulation, reputation and brand, employee experience and HR, and amplification.

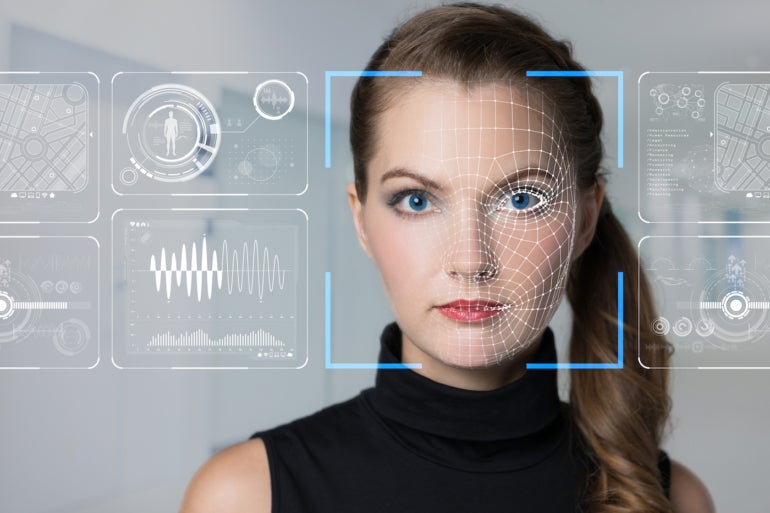

Deepfake is a capability that uses AI technology to create synthetic video and audio content that could be used to impersonate someone, the report’s author, Jeff Pollard, a vice president and principal analyst at Forrester, told TechRepublic.

The difference between deepfake and generative AI is that, with the latter, you type in a prompt to ask a question, and it probabilistically returns an answer, Pollard said. Deepfake “…leverages AI … but it is designed to produce video or audio content as opposed to written answers or responses that a large language model” returns.

Deepfake scams targeting enterprises

These are the five deepfake scams detailed by Forrester.

Fraud

Deepfake technologies can clone faces and voices, the same traits that are used to authenticate and authorize activity, according to Forrester.

“Using deepfake technology to clone and impersonate an individual will lead to fraudulent financial transactions victimizing individuals, but it will also happen in the enterprise,” the report noted.

One example of fraud would be impersonating a senior executive to authorize wire transfers to criminals.

“This scenario already exists today and will increase in frequency soon,” the report cautioned.

Pollard called this the most prevalent type of deepfake “… because it has the shortest path to monetization.”

Stock price manipulation

Newsworthy events can cause stock prices to fluctuate, such as when a well-known executive departs from a publicly traded company. A deepfake of this type of announcement could cause the stock to suffer a short-lived price decline, which could in turn affect employee compensation and the company’s ability to receive financing, the Forrester report said.

Reputation and brand

It’s very easy to create a false social media post of “… a prominent executive using offensive language, insulting customers, blaming partners, and making up information about your products or services,” Pollard said. This scenario creates a nightmare for boards and PR teams, and the report noted that “… it’s all too easy to artificially create this scenario today.”

This could damage the company’s brand, Pollard said, adding that “… it’s, frankly, almost impossible to prevent.”

Employee experience and HR

Another “damning” scenario is when one employee creates nonconsensual pornographic deepfake content using the likeness of another employee and circulates it. This can wreak havoc on that employee’s mental health, threaten their career and will “…almost certainly result in litigation,” the report stated.

The motivation is someone thinking it’s funny or looking for revenge, Pollard said. It’s the scam that scares companies the most because it’s “… the most concerning or pernicious long term because it’s the most difficult to prevent,” he said. “It goes against any conventional employee behavior.”

Amplification

Deepfakes can be used to spread other deepfake content. Forrester likened this to bots that disseminate content, “… but instead of giving those bots usernames and post histories, we give them faces and emotions,” the report said. Those deepfakes could also be used to create reactions to an original deepfake that was designed to damage a company’s brand, so it’s potentially seen by a broader audience.

Organizations’ best defenses against deepfakes

Pollard reiterated that you can’t prevent deepfakes, which can be easily created by downloading a podcast, for example, and then cloning a person’s voice to make them say something they didn’t actually say.

“There are step-by-step instructions for anyone to do this (the ability to clone a person’s voice) technically,” he noted. But one of the defenses against this “… is to not say and do awful things.”

Further, if the company has a history of being trustworthy, authentic, dependable and transparent, “… it will be difficult for people to believe all of sudden you’re as awful as a video might make you appear to be,” he said. “But if you have a track record of not caring about privacy, it’s not hard to make a video of an executive…” saying something damaging.

There are tools that offer integrity, verification and traceability to indicate that something isn’t synthetic, Pollard added, such as FakeCatcher from Intel. “It looks at … blood flow in the pixels in the video to figure out what someone’s thinking when this was recorded.”

But Pollard issued a note of pessimism about detection tools, saying they “… evolve and then adversaries get around them and then they have to evolve again. It’s the age-old story with cybersecurity.”

He stressed that deepfakes aren’t going to go away, so organizations need to think proactively about the possibility that they could become a target. Deepfakes will happen, he said.

“Don’t make the first time you’re thinking about this when it happens. You want to rehearse this and understand it so you know exactly what to do when it happens,” he said. “It doesn’t matter if it’s true – it matters if it’s believed enough for me to share it.”

And a final reminder from Pollard: “This is the internet. Everything lives forever.”
