4K vs. HDR monitors: How to choose

4K and HDR are the peanut butter and jelly of modern televisions. Where you find one, you’ll find the other. Together, they’re the recipe for a delicious eye-candy sandwich.

Monitors aren’t so lucky. While it’s possible to find 4K HDR monitors, they are fewer in number and expensive. You’ll likely need to choose which feature is more important to you. Here’s how to decide.

The basics: What do 4K and HDR mean?

4K is shorthand for a display’s resolution. It typically describes a 16:9 aspect ratio with a resolution of 3,840 x 2,160. Companies occasionally get creative with marketing but, in most cases, will adhere to this definition.
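To put that definition in perspective, here is a small Python sketch of the arithmetic behind it. The 1,920 x 1,080 (1080p) comparison point is our addition for context; it is not part of the 4K definition itself.

```python
# Pixel arithmetic for the common 4K definition: 3,840 x 2,160 at 16:9.
from math import gcd


def describe(width: int, height: int) -> tuple[int, str]:
    """Return the total pixel count and the reduced aspect ratio."""
    d = gcd(width, height)
    return width * height, f"{width // d}:{height // d}"


uhd_pixels, uhd_ratio = describe(3840, 2160)
fhd_pixels, _ = describe(1920, 1080)  # 1080p, used only as a comparison point

print(uhd_pixels, uhd_ratio)      # 8294400 16:9
print(uhd_pixels // fhd_pixels)   # 4
```

In other words, a 4K panel packs four times as many pixels as a 1080p panel of the same size, which is where its extra sharpness comes from.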

High Dynamic Range describes content that provides a wider range of luminance and color than standard dynamic range (SDR). This allows brighter highlights, stronger contrast, and richer color. The term does not always refer to a specific standard, though, so it can be a bit fuzzy. Read our complete guide to HDR on your PC if you want to know the details.

If you’re new to display terminology in general, our guide on what to look for in a gaming monitor can also help get you up to speed.

4K vs. HDR for a monitor: What’s more important?

The answer to this question is clear-cut: 4K is almost always more important than HDR. If forced to choose between them, most people should heavily favor a 4K display over one that offers HDR.

Why? It all has to do with standardization and software support (or the lack of it).

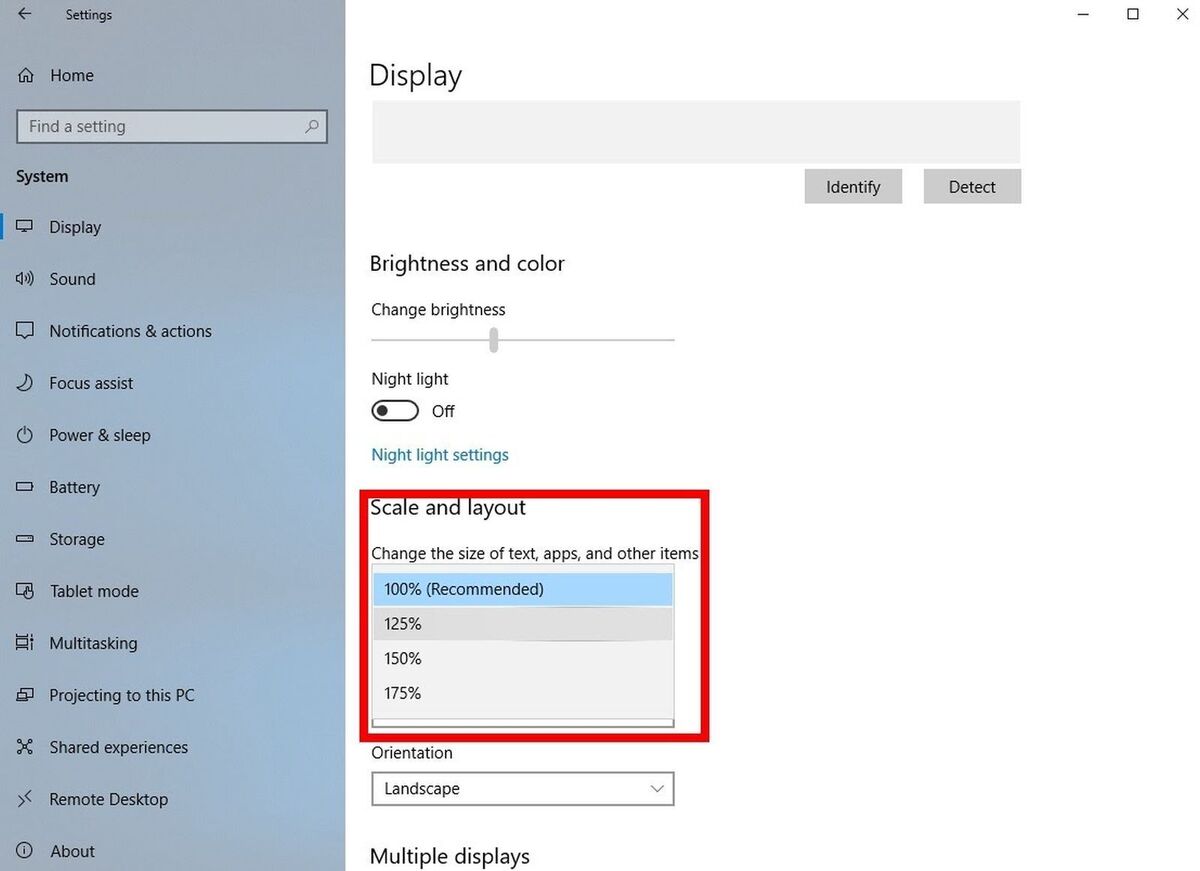

4K is not an especially common resolution even among computer monitors, but it's nothing new. The first mainstream 4K monitors hit store shelves in 2013. Windows 10 launched with good interface scaling support that made 4K resolution easy to use, and it has received further updates to improve scaling since. macOS also has excellent scaling support for 4K resolution thanks to Apple's focus on high pixel density displays.
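As a rough model of what interface scaling does, the sketch below divides the native 4K resolution by an operating-system scale factor to get the desktop size that text and menus are sized for. The scale percentages are illustrative, and this simple division is a simplification: real Windows and macOS scaling involves more than this.

```python
# Simplified model: an OS scale factor enlarges UI elements, so the desktop
# "feels" like a lower-resolution screen while text stays sharp.
def effective_workspace(width: int, height: int, scale_percent: int) -> tuple[int, int]:
    """Approximate logical desktop size when the UI is enlarged by scale_percent."""
    return width * 100 // scale_percent, height * 100 // scale_percent


for scale in (100, 125, 150, 200):
    w, h = effective_workspace(3840, 2160, scale)
    print(f"{scale}%: UI sized as if on a {w} x {h} screen")
```

At 150%, for example, a 4K monitor gives you the workspace of a 2,560 x 1,440 display but with noticeably crisper text, which is why good scaling support matters so much for 4K.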

HDR is less mature. AMD, Intel, and Nvidia added support for it only in 2016, and Windows didn't add an HDR toggle until 2017. Windows still can't automatically detect an HDR monitor and enable the appropriate settings, though that feature is expected to arrive soon. HDR support in monitors is the Wild West: only the VESA DisplayHDR certification (which is entirely optional) offers a hint of standardization.

It's a similar story with content. 4K content is not universal, but it's generally easy to find. Virtually all games, even those that are several years old, support 4K resolution. Major video streaming services support 4K resolution, too.

HDR support is less common. Only the latest games are likely to embrace it. Many streaming services don't yet support HDR streaming to a PC, and there's some setup to do even when it's possible.

Most HDR monitors suck

HDR has another problem. Most HDR monitors sold today are really, really terrible at HDR.

As mentioned, High Dynamic Range enables a wider range of luminance and color. But you'll only enjoy the full benefits on a display whose brightness, contrast, and color approach what HDR standards allow. Most computer monitors do well in color but falter in brightness and contrast.

Different HDR standards set different limits on maximum brightness, but at minimum they allow for up to 1,000 nits. Dolby Vision HDR can deliver up to 10,000 nits. Yet most computer monitors max out at around 400 nits or less.

Monitors also tend to have poor contrast, which limits the difference between the brightest and darkest areas of the image. Few monitors include the Mini-LED or OLED technology found in modern televisions, though such monitors are becoming more common (albeit at a fairly steep price).

You can view HDR on any monitor that accepts an HDR signal, no matter its capabilities, but you won’t see its full potential unless the monitor is of exceptionally high quality. A monitor with sub-par HDR will look different from SDR—but it won’t always look better.

Who should care about HDR?

I’ve laid out a damning case against most HDR monitors, and for good reason. HDR doesn’t make sense for most people right now. So, is there any situation where it makes sense to pick HDR over 4K?

There are two cases where HDR becomes critical. Video creators shooting HDR content may want a great HDR monitor for editing, because it shows them what viewers with a great HDR display will see.

Bleeding-edge, cost-is-no-obstacle PC gamers should also care about HDR. A great HDR monitor delivers a huge boost in perceived graphics quality. It’s the single greatest improvement you can make to a game’s visuals, and more modern games are embracing HDR.

However, these scenarios also benefit from 4K resolution. It’s hard to imagine a video creator shooting in HDR but not 4K, and gamers who want the absolute best visual quality possible will also crave 4K for its unparalleled sharpness and detail.

Prioritize 4K. It’s the easy choice.

In a sense, computer monitor makers and software developers have made the choice for you. You'd of course want a 4K HDR display if it did both well, but few monitors do, and there's still little HDR content to view.

A quality 4K monitor like the Asus TUF Gaming VG289Q—our favorite budget 4K gaming monitor—can be purchased for well under $400 and is useful no matter how you intend to use it. That makes the 4K vs. HDR debate an easy choice for most people.

Now that you know the pros and cons of each, check out our roundups of the best monitors and the best 4K displays for concrete buying advice. 4K, HDR, gaming, content creation, affordable picks—we’ve got recommendations for every need.
