Study establishes theory of overparametrization in quantum neural networks

A theoretical proof shows that a technique called overparametrization enhances performance in quantum machine learning for applications that stymie classical computers.

“We believe our results will be useful in using machine learning to learn the properties of quantum data, such as classifying different phases of matter in quantum materials research, which is very difficult on classical computers,” said Diego Garcia-Martin, a postdoctoral researcher at Los Alamos National Laboratory. He is a co-author of a new paper on the technique by a Los Alamos team, published in Nature Computational Science.

Garcia-Martin worked on the research in the Laboratory’s Quantum Computing Summer School in 2021 as a graduate student from the Autonomous University of Madrid.

Machine learning, a branch of artificial intelligence, usually involves training neural networks to process data and learn how to solve a given task. In a nutshell, one can think of the neural network as a box with knobs, or parameters, that takes data as input and produces an output that depends on the configuration of the knobs.

“During the training phase, the algorithm updates these parameters as it learns, trying to find their optimal setting,” Garcia-Martin said. “Once the optimal parameters are determined, the neural network should be able to extrapolate what it learned from the training instances to new and previously unseen data points.”
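As a rough illustration of the "box with knobs" picture, here is a minimal classical sketch in Python. It is not taken from the paper and is not a quantum neural network: the two-knob straight-line model, the training data, the learning rate and the step count are all assumptions chosen for illustration. Training nudges the knobs to reduce the error on the training points, and the trained model is then asked about an input it never saw.

```python
# Toy "box with knobs": a model with two parameters, a slope and an offset.
# Everything here (model, data, learning rate) is a hypothetical illustration.

def predict(knobs, x):
    """Output of the box for input x, given the current knob settings."""
    slope, offset = knobs
    return slope * x + offset


def train(data, steps=2000, lr=0.05):
    """Gradient descent on the mean squared error over the training data."""
    slope, offset = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to each knob.
        grad_slope = sum(2 * (predict((slope, offset), x) - y) * x for x, y in data) / n
        grad_offset = sum(2 * (predict((slope, offset), x) - y) for x, y in data) / n
        # Turn each knob a little in the direction that lowers the error.
        slope -= lr * grad_slope
        offset -= lr * grad_offset
    return slope, offset


# Training instances generated from the rule y = 2x + 1.
training_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

knobs = train(training_data)
print("learned knobs:", knobs)                      # close to (2, 1)
print("prediction at x = 10:", predict(knobs, 10.0))  # close to 21
```

The input x = 10 lies well outside the training points, so the last line is a small example of extrapolating to previously unseen data.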

Classical and quantum AI share a challenge in training: the algorithm can reach a sub-optimal configuration of the parameters and stall out.

A leap in performance

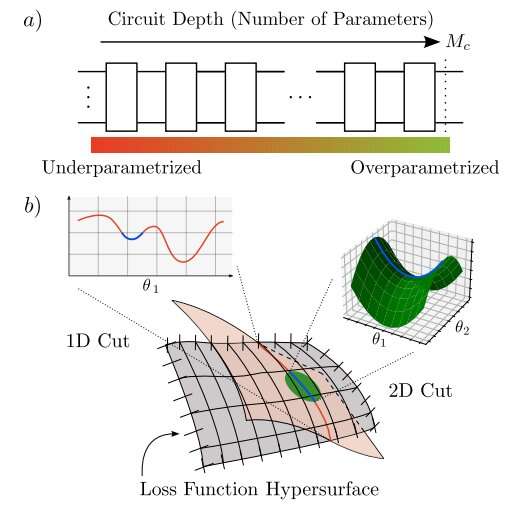

Overparametrization, a well-known concept in classical machine learning that involves adding more and more parameters, can prevent that stall-out.

The implications of overparametrization in quantum machine learning models were poorly understood until now. In the new paper, the Los Alamos team establishes a theoretical framework for predicting the critical number of parameters at which a quantum machine learning model becomes overparametrized. At a certain critical point, adding parameters prompts a leap in network performance and the model becomes significantly easier to train.

“By establishing the theory that underpins overparametrization in quantum neural networks, our research paves the way for optimizing the training process and achieving enhanced performance in practical quantum applications,” explained Martin Larocca, the lead author of the manuscript and postdoctoral researcher at Los Alamos.

By taking advantage of aspects of quantum mechanics such as entanglement and superposition, quantum machine learning offers the promise of much greater speed than machine learning on classical computers, a gain known as quantum advantage.

Avoiding traps in a machine learning landscape

To illustrate the Los Alamos team’s findings, Marco Cerezo, the senior scientist on the paper and a quantum theorist at the Lab, described a thought experiment in which a hiker looking for the tallest mountain in a dark landscape represents the training process. The hiker can step only in certain directions and assesses their progress by measuring altitude using a limited GPS system.

In this analogy, the number of parameters in the model corresponds to the directions available for the hiker to move, Cerezo said. “One parameter allows movement back and forth, two parameters enable lateral movement and so on,” he said. A data landscape would likely have more than three dimensions, unlike our hypothetical hiker’s world.

With too few parameters, the hiker can’t thoroughly explore and might mistake a small hill for the tallest mountain or get stuck in a flat region where any step seems futile. However, as the number of parameters increases, the hiker can move in more directions in higher dimensions. What initially appeared as a local hill might turn out to be an elevated valley between peaks. With the additional parameters, the hiker avoids getting trapped and finds the true peak, or the solution to the problem.
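The hiker picture can be sketched with a toy classical landscape in Python. The `height` function below is invented for illustration and does not come from the paper; the starting point, step size and step count are likewise assumptions. With one knob the hiker can move only along x and stalls on a small hill. With a second knob, that same spot is no longer a summit, because the ground rises to the side, and the hiker walks around to the higher peak.

```python
# Hypothetical landscape: a ring-shaped ridge, tilted so that the side near
# x = -1 is higher than the side near x = +1.

def height(x, y):
    return -(x * x + y * y - 1.0) ** 2 - 0.3 * x


def climb(x, y, sidestep_allowed, steps=5000, lr=0.02):
    """Gradient ascent; y stays frozen unless sidestep_allowed is True."""
    for _ in range(steps):
        ring = x * x + y * y - 1.0
        slope_x = -4.0 * x * ring - 0.3   # partial derivative of height in x
        slope_y = -4.0 * y * ring         # partial derivative of height in y
        x += lr * slope_x
        if sidestep_allowed:
            y += lr * slope_y
    return x, y


# Same starting point for both hikers, slightly off the x axis.
one_knob = climb(1.5, 0.05, sidestep_allowed=False)
two_knobs = climb(1.5, 0.05, sidestep_allowed=True)

print("one knob :", one_knob, "height", height(*one_knob))
print("two knobs:", two_knobs, "height", height(*two_knobs))
```

In this sketch the one-knob hiker ends near x = 0.96 on the lower hill, while the two-knob hiker ends near x = -1.04 on the higher peak. The example only mirrors the analogy: it says nothing about how many parameters a particular quantum model needs, which is the question the paper's theory addresses.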

More information:

Martín Larocca et al., Theory of overparametrization in quantum neural networks, Nature Computational Science (2023). DOI: 10.1038/s43588-023-00467-6. www.nature.com/articles/s43588-023-00467-6. On arXiv: DOI: 10.48550/arxiv.2109.11676

Citation: Study establishes theory of overparametrization in quantum neural networks (2023, June 26), retrieved 26 June 2023 from https://techxplore.com/news/2023-06-theory-overparametrization-quantum-neural-networks.html

