A technique that allows legged robots to continuously learn from their environment

Legged robots have numerous advantageous qualities, including the ability to travel long distances and navigate a wide range of land-based environments. So far, however, legged robots have been primarily trained to move in specific environments, rather than to adapt to their surroundings and operate efficiently in a multitude of different settings. A key reason for this is that it is very hard to anticipate every condition a robot might encounter during operation and to train it in advance to respond well to each one.

Researchers at Berkeley AI Research and UC Berkeley have recently developed a reinforcement-learning-based computational technique that could circumvent this problem by allowing legged robots to actively learn from their surrounding environment and continuously improve their locomotion skills. This technique, presented in a paper pre-published on arXiv, can fine-tune a robot’s locomotion policies in the real world, allowing it to move around more effectively in a variety of environments.

“We can’t pre-train robots in such ways that they will never fail when deployed in the real world,” Laura Smith, one of the researchers who carried out the study, told TechXplore. “So, for robots to be autonomous, they must be able to recover and learn from failures. In this work, we develop a system for performing RL in the real world to enable robots to do just that.”

The reinforcement learning approach devised by Smith and her colleagues builds on a motion imitation framework that researchers at UC Berkeley developed in the past. This framework allows legged robots to easily acquire locomotion skills by observing and imitating the movements of animals.
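For readers unfamiliar with motion imitation, the basic idea can be shown with a tracking reward: the robot earns more reward the more closely its joint angles follow a reference pose taken from recorded animal motion. The sketch below is a simplified, hypothetical version that assumes a single exponentiated pose-error term. The function name `pose_imitation_reward` and the `scale` value are illustrative. A full imitation reward typically combines several such terms (for example, joint velocities and body position), so this is not the Berkeley framework's exact reward.

```python
import math
from typing import Sequence


def pose_imitation_reward(
    reference_pose: Sequence[float],
    robot_pose: Sequence[float],
    scale: float = 5.0,  # hypothetical sharpness parameter
) -> float:
    """Reward in (0, 1]: equals 1.0 when the robot's joint angles match the
    reference (animal-derived) pose exactly and decays exponentially with
    the summed squared joint-angle error."""
    if len(reference_pose) != len(robot_pose):
        raise ValueError("poses must have the same number of joints")
    squared_error = sum((r - q) ** 2 for r, q in zip(reference_pose, robot_pose))
    return math.exp(-scale * squared_error)
```

Because the reward never becomes negative and is highest at a perfect match, a reinforcement learning agent maximizing it is pushed toward reproducing the reference motion as closely as possible.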

In addition, the new technique introduced by the researchers utilizes a model-free reinforcement learning algorithm devised by a team at New York University (NYU), dubbed randomized ensembled double Q-learning (REDQ). Essentially, this is a computational method that allows computers and robotic systems to learn continuously and very efficiently from prior experience.
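REDQ's central idea, as described by Chen et al., lies in how it builds the learning target. It maintains an ensemble of Q-functions (value estimates) and, for each update, takes the minimum over a small randomly chosen subset of them. This curbs overestimation while allowing many gradient updates per step of real experience. The sketch below shows only that target computation for a single transition and assumes a soft actor-critic style entropy term. The function name and the default values of `gamma`, `alpha` and `subset_size` are illustrative, and the neural networks and replay buffer are left out.

```python
import random
from typing import Optional, Sequence


def redq_target(
    reward: float,
    next_q_values: Sequence[float],
    next_log_prob: float,
    done: bool,
    gamma: float = 0.99,
    alpha: float = 0.2,
    subset_size: int = 2,
    rng: Optional[random.Random] = None,
) -> float:
    """Bootstrapped target for one transition (s, a, r, s').

    next_q_values: one target-network estimate Q_i(s', a') per ensemble
        member, where a' was sampled from the current policy at s'.
    next_log_prob: log pi(a' | s') for that sampled action.
    """
    if not 1 <= subset_size <= len(next_q_values):
        raise ValueError("subset_size must be between 1 and the ensemble size")
    rng = rng if rng is not None else random.Random()
    # Randomly pick a few ensemble members and keep the most pessimistic one.
    subset = rng.sample(list(next_q_values), subset_size)
    # Soft (entropy-regularized) value of the next state, as in SAC.
    soft_next_value = min(subset) - alpha * next_log_prob
    # No bootstrapping past a terminal transition.
    return reward + gamma * (0.0 if done else soft_next_value)
```

In the full algorithm, every Q-function in the ensemble is regressed toward this same target, and the update is repeated many times for each new step of experience (a high update-to-data ratio). That repetition is what makes the method sample-efficient enough to train on a physical robot.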

“First, we pre-trained a model that gives robots locomotion skills, including a recovery controller, in simulation,” Smith explained. “Then, we simply continued to train the robot when it’s deployed in a new environment in the real world, resetting it with a learned controller. Our system relies only on the robot’s onboard sensors, so we were able to train the robot in unstructured, outdoor settings.”
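The recipe Smith describes (pre-train in simulation, then keep training after deployment) can be outlined as a simple loop. In the sketch below, every hook (`read_sensors`, `apply_action`, `policy`, `learner_update`, `reset_with_recovery`) is a hypothetical placeholder for robot-specific code, not the researchers' API. The point is that the pre-trained policy keeps receiving updates from onboard-sensor data and that trials are reset by a controller rather than by a person.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical minimal types: a state is a list of onboard sensor readings.
State = List[float]
Action = List[float]
Transition = Tuple[State, Action, float, State]


@dataclass
class ReplayBuffer:
    data: List[Transition] = field(default_factory=list)

    def add(self, transition: Transition) -> None:
        self.data.append(transition)


def finetune_in_real_world(
    read_sensors: Callable[[], State],
    apply_action: Callable[[Action], Tuple[float, bool]],
    policy: Callable[[State], Action],
    learner_update: Callable[[ReplayBuffer], None],
    reset_with_recovery: Callable[[], None],
    num_episodes: int,
    max_steps: int,
) -> ReplayBuffer:
    """Keep training a simulation-pretrained policy on the physical robot.

    `policy` is assumed to start from weights learned in simulation,
    `learner_update` performs one off-policy RL step (e.g. with REDQ),
    and `reset_with_recovery` runs a learned recovery controller.
    """
    buffer = ReplayBuffer()
    for _ in range(num_episodes):
        state = read_sensors()
        for _ in range(max_steps):
            action = policy(state)
            # Reward and fall detection come from onboard sensing only (assumption).
            reward, fallen = apply_action(action)
            next_state = read_sensors()
            buffer.add((state, action, reward, next_state))
            learner_update(buffer)  # training continues after deployment
            state = next_state
            if fallen:
                break
        reset_with_recovery()  # autonomous reset between trials
    return buffer
```

Because the loop only needs sensor readings, a reward estimate and a reset routine, nothing in it depends on motion-capture rooms or other external infrastructure, which is what makes outdoor training feasible.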

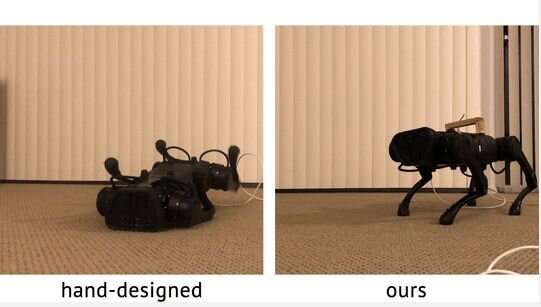

The researchers evaluated their reinforcement learning system in a series of experiments, applying it to a four-legged robot and observing how it learned to move on different terrains and materials, including carpet, lawn, memory foam and a doormat. Their findings were highly promising: the technique allowed the robot to autonomously fine-tune its locomotion strategies on all of the different surfaces.

“We also found that we could treat the recovery controller as another learned locomotion skill and use it to automatically reset the robot in between trials, without requiring an expert to engineer a recovery controller or someone to manually intervene during the learning process,” Smith said.
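Treating recovery as just another skill amounts to switching control between two learned policies depending on the robot's state, with each policy logging its own experience so that it can keep improving. A minimal sketch follows, assuming a scalar toy state and hypothetical `is_fallen` and `is_upright` checks. The skill names, the switching rule and the function name are illustrative, and the actual training updates are omitted.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical skill names; each skill is its own learned policy.
FORWARD, RECOVER = "forward", "recover"


def run_autonomous_trials(
    skills: Dict[str, Callable[[float], float]],
    step: Callable[[float, float], float],
    is_fallen: Callable[[float], bool],
    is_upright: Callable[[float], bool],
    num_steps: int,
    state: float,
) -> Dict[str, List[Tuple[float, float, float]]]:
    """Alternate between a locomotion skill and a learned recovery skill.

    When the robot falls, control passes to the recovery skill until the
    robot is upright again; each skill stores its own (state, action,
    next_state) experience so both could keep being fine-tuned.
    """
    buffers: Dict[str, List[Tuple[float, float, float]]] = {name: [] for name in skills}
    active = FORWARD
    for _ in range(num_steps):
        action = skills[active](state)
        next_state = step(state, action)
        buffers[active].append((state, action, next_state))
        if active == FORWARD and is_fallen(next_state):
            active = RECOVER  # automatic reset: no human intervention
        elif active == RECOVER and is_upright(next_state):
            active = FORWARD  # start the next trial
        state = next_state
    return buffers
```

Keeping separate buffers per skill is one plausible design choice: it prevents experience collected while getting up from being mixed into the locomotion policy's training data, while still letting the recovery skill improve over time.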

In the future, the new reinforcement learning technique developed by this team of researchers could be used to significantly improve the locomotion skills of both existing and newly developed legged robots, allowing them to move on a vast variety of surfaces and terrains. This could in turn facilitate the use of these robots for complex missions that involve traveling long distances on land through numerous environments with different characteristics.

“We are now excited to adapt our system into a lifelong learning process, where a robot never stops learning when subjected to the diverse, ever-changing situations it encounters in the real world,” Smith said.


Laura Smith et al, Legged robots that keep on learning: Fine-tuning locomotion policies in the real world. arXiv:2110.05457v1 [cs.RO], arxiv.org/abs/2110.05457

Xue Bin Peng et al, Learning agile robotic locomotion skills by imitating animals. arXiv:2004.00784v3 [cs.RO], arxiv.org/abs/2004.00784

Xinyue Chen et al, Randomized ensembled double Q-learning: Learning fast without a model. arXiv:2101.05982v2 [cs.LG], arxiv.org/abs/2101.05982

© 2021 Science X Network

Citation: A technique that allows legged robots to continuously learn from their environment (2021, November 1) retrieved 1 November 2021 from https://techxplore.com/news/2021-11-technique-legged-robots-environment.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
