Recent advances in robotics and artificial intelligence (AI) have opened exciting new avenues for teleoperation, the remote control of robots to complete tasks in a distant location. This could, for instance, allow users to visit museums from afar, complete maintenance or technical tasks in spaces that are difficult to access, or attend events remotely in more interactive ways.

Most existing teleoperation systems are designed for a specific setting and a specific robot. This makes them difficult to apply in different real-world environments, greatly limiting their potential.

Researchers at NVIDIA and UC San Diego recently created AnyTeleop, a computer vision–based teleoperation system that could be applied to a wider range of scenarios. AnyTeleop, introduced in a paper pre-published on arXiv, enables the remote operation of various robotic arms and hands to tackle different manual tasks.

“A primary objective at NVIDIA is researching how humans can teach robots to do tasks,” Dieter Fox, senior director of robotics research at NVIDIA, head of the NVIDIA Robotics Research Lab, professor at the University of Washington Paul G. Allen School of Computer Science & Engineering and head of the UW Robotics and State Estimation Lab, told Tech Xplore.

“Prior work has focused on how a human will teleoperate, or guide, the robot—but this approach has two barriers. First, training a state-of-the-art model requires many demonstrations. Second, set-ups usually feature a costly apparatus or sensory hardware and are designed only for a particular robot or deployment environment,” said Fox.

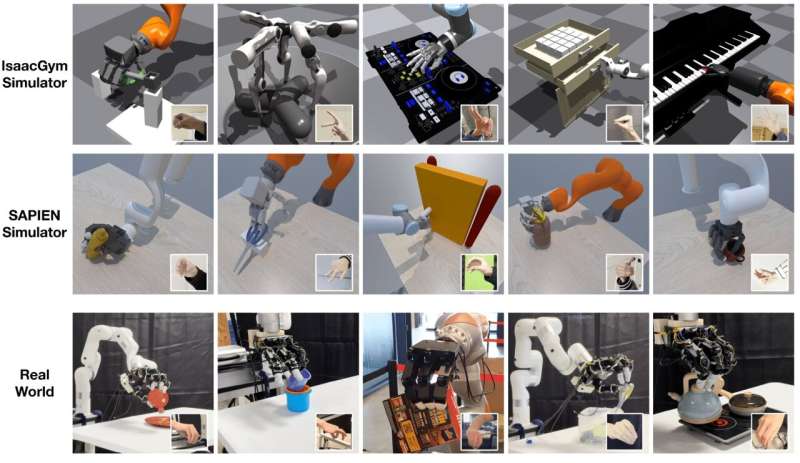

The key goal of the recent work by Fox and his colleagues was to create a teleoperation system that is low-cost, easy to deploy and generalizes well across different tasks, environments and robotic systems. To train their system, the researchers teleoperated both virtual robots in simulated environments and real robots in a physical environment; working in simulation reduced the need to purchase and assemble many robots.

“AnyTeleop is a vision-based teleoperation system that allows humans to use their hands to control dexterous robotic hand-arm systems,” Fox explained. “The system tracks human hand poses from single or multiple cameras and then retargets them to control the fingers of a multi-fingered robot hand. The wrist point is used to control the robot arm motion with a CUDA-powered motion planner.”
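To make the retargeting step more concrete, here is a minimal Python sketch of one common formulation. It is an illustration, not AnyTeleop's actual code: it chooses robot joint angles so that the robot's knuckle-to-fingertip vector matches the corresponding human vector, scaled to the robot's size. It assumes a hypothetical planar finger with two joints; the link lengths, joint limits, scale factor and step size are illustrative values, and a real system would solve this jointly for every finger of a multi-fingered hand in 3D.

```python
import math

# Hypothetical planar robot finger: two links (proximal, distal), in metres.
LINK_LENGTHS = (0.05, 0.04)
# Hypothetical joint limits in radians for the two joints.
JOINT_LIMITS = ((0.0, math.pi / 2), (0.0, math.pi / 2))


def fingertip(q):
    """Forward kinematics: fingertip position relative to the knuckle."""
    l1, l2 = LINK_LENGTHS
    x = l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1])
    y = l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1])
    return x, y


def retarget(human_vec, scale=1.0, q0=(0.1, 0.1), lr=50.0, iters=2000):
    """Find joint angles whose knuckle-to-fingertip vector matches the scaled
    human vector, using projected gradient descent on the squared error."""
    tx, ty = scale * human_vec[0], scale * human_vec[1]
    l1, l2 = LINK_LENGTHS
    q = list(q0)
    for _ in range(iters):
        x, y = fingertip(q)
        ex, ey = x - tx, y - ty
        # Jacobian of the fingertip position with respect to the joint angles.
        s1, c1 = math.sin(q[0]), math.cos(q[0])
        s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
        j11, j12 = -l1 * s1 - l2 * s12, -l2 * s12
        j21, j22 = l1 * c1 + l2 * c12, l2 * c12
        # Gradient of 0.5 * ||error||^2 is J^T * error.
        q[0] -= lr * (j11 * ex + j21 * ey)
        q[1] -= lr * (j12 * ex + j22 * ey)
        # Project the joint angles back into their limits.
        for i, (lo, hi) in enumerate(JOINT_LIMITS):
            q[i] = min(max(q[i], lo), hi)
    return tuple(q)
```

Projected gradient descent keeps the sketch dependency-free; a production retargeter would more likely use a dedicated nonlinear optimizer and warm-start each camera frame from the previous solution, so that the robot's fingers move smoothly.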

Unlike most teleoperation systems introduced in past studies, AnyTeleop can be interfaced with different robot arms, robot hands and camera configurations, in both simulated and real-world environments. It can also be used both when users are near the robot and when they are at distant locations.

The AnyTeleop platform can also help to collect human demonstration data (i.e., data representing the movements and actions that humans perform when executing specific manual tasks). This data could in turn be used to better train robots to autonomously complete different tasks.

“The major breakthrough of AnyTeleop is its generalizable and easily deployable design,” Fox said. “One potential application is to deploy virtual environments and virtual robots in the cloud, allowing edge users with entry-level computers and cameras (like an iPhone or PC) to teleoperate them. This could ultimately revolutionize the data pipeline for researchers and industrial developers teaching robots new skills.”

In initial tests, AnyTeleop outperformed an existing teleoperation system designed for a specific robot, even when tested on the very robot that system was built for. This highlights its potential value as a general tool for teleoperation applications.

NVIDIA will soon release an open-source version of the AnyTeleop system, allowing research teams worldwide to test it and apply it to their robots. In the future, this promising new platform could contribute to the scaling up of teleoperation systems, while also facilitating the collection of training data for robotic manipulators.

“We now plan to use the collected data to explore further robot learning,” Fox added. “One notable focus going forward is how to overcome the domain gaps when transferring robot models from simulation to the real world.”

More information:

Yuzhe Qin et al, AnyTeleop: A General Vision-Based Dexterous Robot Arm-Hand Teleoperation System, arXiv (2023). DOI: 10.48550/arxiv.2307.04577

© 2023 Science X Network

Citation: A computer vision–based teleoperation system that can be applied to different robots (2023, August 1), retrieved 3 August 2023 from https://techxplore.com/news/2023-08-visionbased-teleoperation-robots.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
