A new model that allows robots to re-identify and follow human users

In recent years, roboticists and computer scientists have introduced various new computational tools that could improve interactions between robots and humans in real-world settings. The overarching goal of these tools is to make robots more responsive and attuned to the users they are assisting, which could in turn facilitate their widespread adoption.

Researchers at Leonardo Labs and the Italian Institute of Technology (IIT) in Italy recently introduced a new computational framework that allows robots to recognize specific users and follow them around within a given environment. This framework, presented in a paper published as part of the 2023 IEEE International Conference on Advanced Robotics and Its Social Impacts (ARSO), allows robots to re-identify users in their surroundings, while also performing specific actions in response to hand gestures performed by the users.

“We aimed to create a ground-breaking demonstration to attract stakeholders to our laboratories,” Federico Rollo, one of the researchers who carried out the study, told Tech Xplore. “The Person-Following robot is a prevalent application found in many commercial mobile robots, especially in industrial environments or for assisting individuals. Typically, such algorithms use external Bluetooth or Wi-Fi emitters, which can interfere with other sensors and the user is required to carry.”

The key objective of the recent work by Rollo and his colleagues was to create a re-identification model that can recognize specific targets in images recorded by an RGB camera. RGB cameras are among the most widely used sensors in robotics, so they are easy to source and integrate with existing robotic systems.

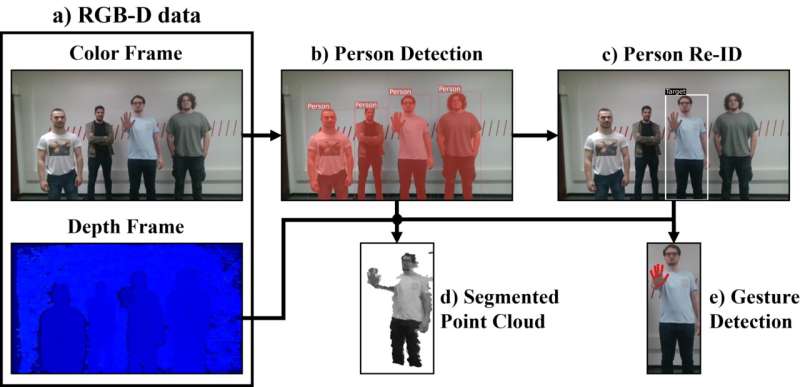

“The re-identification module we developed comprises two consecutive steps: a calibration step and a re-identification step,” Rollo explained.

“During the calibration step, the target person is requested to move randomly in front of the robot. In this phase, the robot utilizes a neural network to detect the person and learn their appearance in the form of network embeddings (think of an abstract vector representing the person’s features). These embeddings are then used to create a statistical model that represents the target.”
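The calibration step described above can be illustrated with a minimal sketch. The article does not specify which statistical model the researchers use, so this example assumes the simplest plausible one: a per-dimension mean and standard deviation computed over the embeddings collected while the target moves in front of the robot. The function name `calibrate` and the dictionary layout are hypothetical.

```python
from statistics import mean, pstdev


def calibrate(embeddings):
    """Build a simple statistical model of the target from calibration embeddings.

    ASSUMPTION: the model is a per-dimension mean and (population) standard
    deviation. The article only says "a statistical model"; the real
    framework may use something different.
    """
    if not embeddings:
        raise ValueError("need at least one calibration embedding")
    # Transpose so that each tuple holds one feature dimension across all samples.
    dims = list(zip(*embeddings))
    return {
        "mean": [mean(d) for d in dims],
        "std": [pstdev(d) for d in dims],
    }


# Two toy 2-D embeddings standing in for the network's output vectors.
model = calibrate([[0.0, 2.0], [2.0, 2.0]])
```

In practice the embeddings would come from the person-detection network mentioned in the quote and would have far more than two dimensions.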

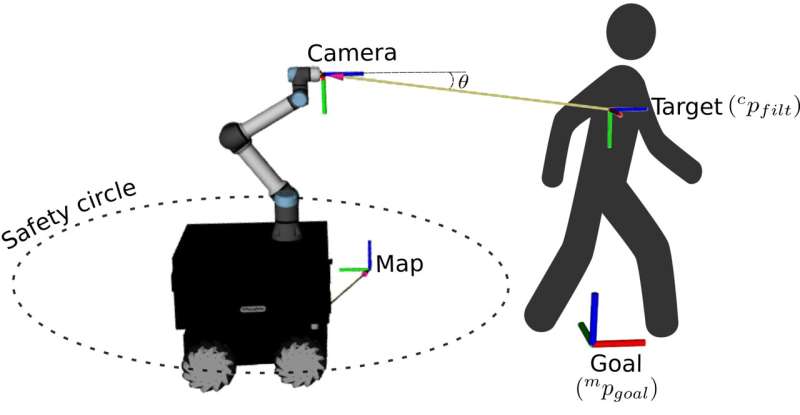

In the second stage of its processing, the module created by the researchers re-identifies targets while they are naturally moving in their surroundings. The framework achieves this by analyzing images acquired by one or more RGB cameras, detecting people in these images, computing their features, and comparing these features with those outlined in a model of the target user created during the calibration phase.
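As a rough illustration of this comparison step, the sketch below scores each detected person against a target model and picks the best match. It assumes the model is a per-dimension mean and standard deviation, and that a feature "matches" when it lies within `k` standard deviations of the mean. The matching rule, the thresholds, and the names `match_score` and `select_target` are all assumptions made for the example, not details taken from the paper.

```python
def match_score(embedding, model, k=2.0, eps=1e-6):
    """Fraction of feature dimensions within k standard deviations of the model mean.

    ASSUMPTION: this per-dimension test is illustrative only.
    """
    inside = 0
    for x, mu, sigma in zip(embedding, model["mean"], model["std"]):
        # eps avoids a zero-width band when a dimension had no variance.
        if abs(x - mu) <= k * max(sigma, eps):
            inside += 1
    return inside / len(embedding)


def select_target(candidates, model, threshold=0.8):
    """Return the index of the best-matching detection, or None if nobody matches."""
    scores = [match_score(e, model) for e in candidates]
    if not scores:
        return None
    best = max(range(len(scores)), key=scores.__getitem__)
    return best if scores[best] >= threshold else None


# Hypothetical target model and two detections in the current camera frame.
model = {"mean": [0.0, 1.0, 2.0], "std": [0.1, 0.1, 0.1]}
detections = [[5.0, 5.0, 5.0], [0.05, 1.1, 2.0]]
target_index = select_target(detections, model)  # second detection is selected
```

Returning `None` when no detection clears the threshold lets the caller treat "target not visible" differently from "target found".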

“If certain features statistically match the model, the person with those features is selected as the target,” Rollo said. “This information is then sent to a localization module, which computes the 3D position of the target user and sends velocity commands to the robot to move toward him/her. Additionally, the application includes a gesture detection module.”
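To give a sense of how a 3D target position might be turned into velocity commands, here is a minimal proportional-control sketch. It is not the authors' controller: the frame convention (x forward, y to the left, in meters), the gains, the speed limits, the following distance, and the function name `follow_command` are all assumptions chosen for illustration.

```python
import math


def follow_command(target_xyz, keep_distance=1.0, k_lin=0.5, k_ang=1.0,
                   max_lin=0.8, max_ang=1.0):
    """Compute (linear, angular) velocity toward a target in the robot frame.

    ASSUMPTIONS: x points forward and y to the left; all gains and limits
    are placeholder values, not taken from the paper.
    """
    x, y, _z = target_xyz
    distance = math.hypot(x, y)   # planar distance to the target
    bearing = math.atan2(y, x)    # angle to the target, positive to the left
    # Drive forward only when farther than the desired following distance.
    linear = min(max_lin, max(0.0, k_lin * (distance - keep_distance)))
    # Turn toward the target, clamped to the angular speed limit.
    angular = max(-max_ang, min(max_ang, k_ang * bearing))
    return linear, angular


# Target 3 m straight ahead: drive forward at the speed limit without turning.
linear, angular = follow_command((3.0, 0.0, 0.0))
```

A real system would also need obstacle avoidance and smoothing of the commands, which this sketch leaves out.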

The gesture detection module created by Rollo and his colleagues detects specific hand gestures made by the target user and sends the corresponding commands to the robot. For instance, if a user holds up an open hand within the robot’s field of view, this triggers the stop command, instructing the robot to stop. Conversely, if the user presents a closed hand, the robot will start operating again.
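The stop and resume behavior can be pictured as a small state machine that gates the robot's velocity commands. The sketch below assumes that a separate detector already labels each frame with a gesture string. The labels `"open_hand"` and `"closed_hand"` and the class name `FollowGate` are hypothetical and are not taken from the paper.

```python
class FollowGate:
    """Gate velocity commands based on the last recognized hand gesture.

    ASSUMPTION: gestures arrive as the strings "open_hand" and "closed_hand";
    any other value (including None) leaves the current state unchanged.
    """

    def __init__(self):
        self.following = True

    def update(self, gesture):
        if gesture == "open_hand":      # open hand: stop the robot
            self.following = False
        elif gesture == "closed_hand":  # closed hand: resume following
            self.following = True
        return self.following

    def apply(self, command):
        """Pass the (linear, angular) command through, or zero it when stopped."""
        return command if self.following else (0.0, 0.0)


gate = FollowGate()
gate.update("open_hand")
stopped = gate.apply((0.5, 0.1))   # robot is held in place
gate.update("closed_hand")
moving = gate.apply((0.5, 0.1))    # command passes through again
```

Keeping the state between frames means the user does not have to hold the gesture continuously for the robot to stay stopped.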

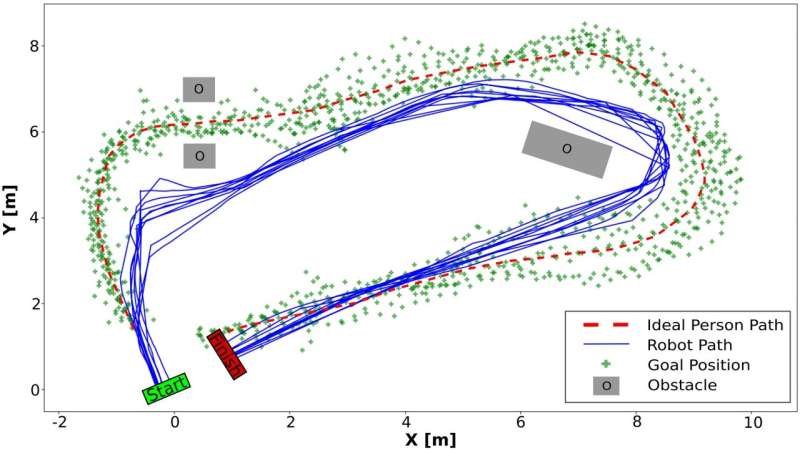

So far, the researchers have tested their framework in a series of experiments using the Robotnik RB-Kairos+ robot. This is a mobile robotic manipulator designed primarily for industrial environments, such as warehouses and manufacturing sites.

“The re-identification module demonstrated remarkable robustness during testing, even in crowded areas,” Rollo said. “This robust behavior opens up various practical applications. For instance, it could be utilized to move high-load objects in industrial settings, guide a robot to different stations in a collaborative or industrial environment, or assist elderly individuals in relocating their belongings within a home.”

The new re-identification and gesture detection framework developed by this team of researchers could soon be applied and further tested in various real-world scenarios that require mobile robots to follow humans and autonomously transport items. Before it can be deployed on a large scale, however, Rollo and his colleagues plan to overcome some limitations of the model identified during their initial experiments.

“One notable limitation is that the statistical model acquired during the calibration phase remains constant during re-identification,” Rollo added.

“This means that if the target changes its appearance, for instance, by wearing different clothes, the algorithm is unable to adapt and requires recalibration. Additionally, there is an expressed interest in exploring new approaches to adapt the neural network itself to recognize the target, potentially leveraging continual learning methods. This could enhance the statistical match between the target model and the features extracted from RGB images, providing a more adaptive and flexible system.”

More information:

Federico Rollo et al, FollowMe: a Robust Person Following Framework Based on Visual Re-Identification and Gestures, 2023 IEEE International Conference on Advanced Robotics and Its Social Impacts (ARSO) (2023). DOI: 10.1109/ARSO56563.2023.10187536. On arXiv: DOI: 10.48550/arxiv.2311.12992

© 2023 Science X Network

Citation: A new model that allows robots to re-identify and follow human users (2023, December 11), retrieved 11 December 2023 from https://techxplore.com/news/2023-12-robots-re-identify-human-users.html

