3 missing Vision Pro features that will play a key role in the future

I haven’t written much about Vision Pro in the month since Apple took the wraps off its headset–er, excuse me, spatial computer. That’s in part because I still haven’t tried it out for myself, but also because I’ve been slowly digesting the staggering amount of technology that the company showed off with its latest device.

In the meantime, there’s been plenty of theorizing about Apple’s ultimate goals with this product category, and whether a truly lightweight augmented reality device is even achievable with our current technology.

As I’ve spent time considering the Vision Pro, I realized that Apple’s story for the device is shaped as much by what it didn’t show us as by what it did. That goes for big categories like fitness or gaming, which didn’t get much time in the Vision Pro announcement, but also for smaller, individual features that already show up in other Apple products but are conspicuously absent from the Vision Pro, though they seem ideally suited to the future of this space.

Two hands and a map

Apple demoed a number of its existing applications that have been ported to the Vision Pro, but the ones it showed off were definitely of a specific bent: productivity tools like Keynote or Freeform, entertainment apps including Music or TV, communication tools such as FaceTime and Messages, and experiential apps like Photos and Mindfulness.

One tool not present (as far as we can tell) on the Vision Pro’s home screen? Maps.

Maps on the iPhone has a very good AR walking experience.

Dan Moren

This strikes me as…not odd, exactly, for reasons I’ll get into, but interesting. Because Maps is one place where Apple has already developed a really good augmented reality interface. Starting in iOS 15, the company rolled out AR-based walking directions, which let you hold up your iPhone and see giant labels overlaid on a camera view. If you’ve missed it, that’s no surprise: it was first available in only a few cities but has quietly expanded in the last couple of years to more than 80 regions worldwide.

I only ended up trying it out recently, and I was impressed with its utility, but annoyed by having to walk around holding up my iPhone. That made me even more certain that this feature would seem perfectly at home on a pair of augmented reality glasses. But it appears to be nowhere to be found on the Vision Pro.

Lost in translation

Any science-fiction fan has at some point or another coveted Star Trek’s universal translator or The Hitchhiker’s Guide to the Galaxy’s Babel fish: the invisible technology that lets you instantly understand and be understood by anybody else. (Not to mention ironing out a lot of pesky plot problems.)

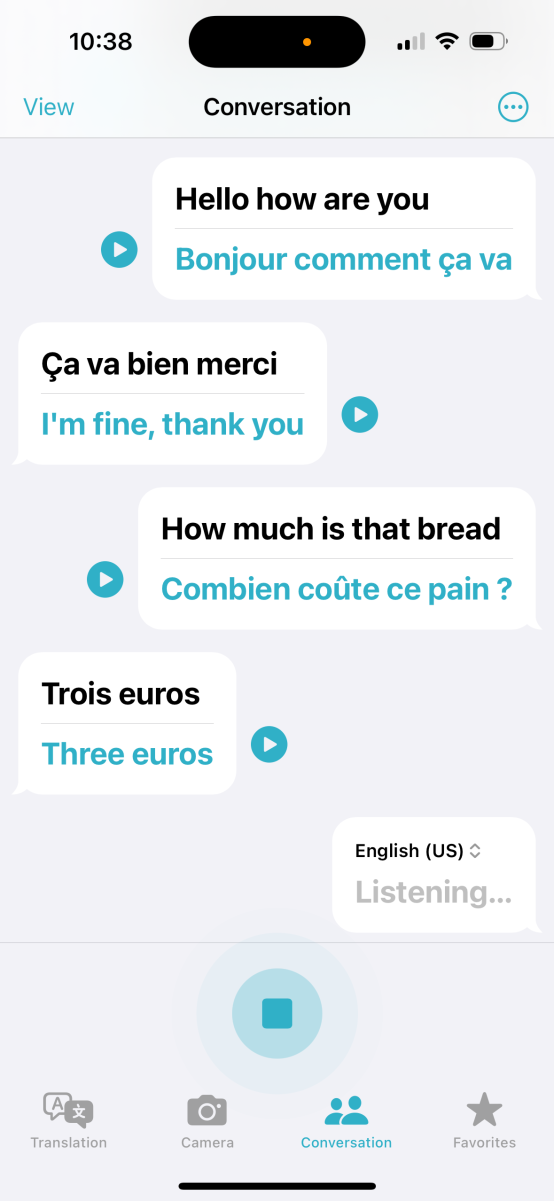

Apple’s been gradually beefing up its own translation technology over the past several years, with the addition of the Translate app and a systemwide translation service. While perhaps not as broad as Google’s own offering, Apple’s translation software has been slowly catching up, even adding a conversation mode that can automatically detect people speaking in multiple languages, translate their words, and play them back in audio.

The iPhone’s Translate app would be an ideal feature for the Vision Pro.

Dan Moren

This is a killer app for travel, and yet another feature that would seem right at home on an augmented reality device. Imagine being able to instantly have a translation spoken into your ear, or displayed on a screen in front of your eyes. Several years ago, I was on an extended stay in India and, when the air conditioner in my now-wife’s apartment broke, I had to call her and have one of her co-workers translate for the repair man, who spoke only Hindi. (To be fair, I did try to use Google’s similar conversation mode at the time, but it ended up being rather cumbersome.)

And it’s not only for conversations either. Apple’s systemwide translation framework already offers the ability to translate text in a photograph, and the Translate app itself has a live camera view, meaning that you could even browse through a store while abroad and have items you see around you translated on the fly.

Don’t look up

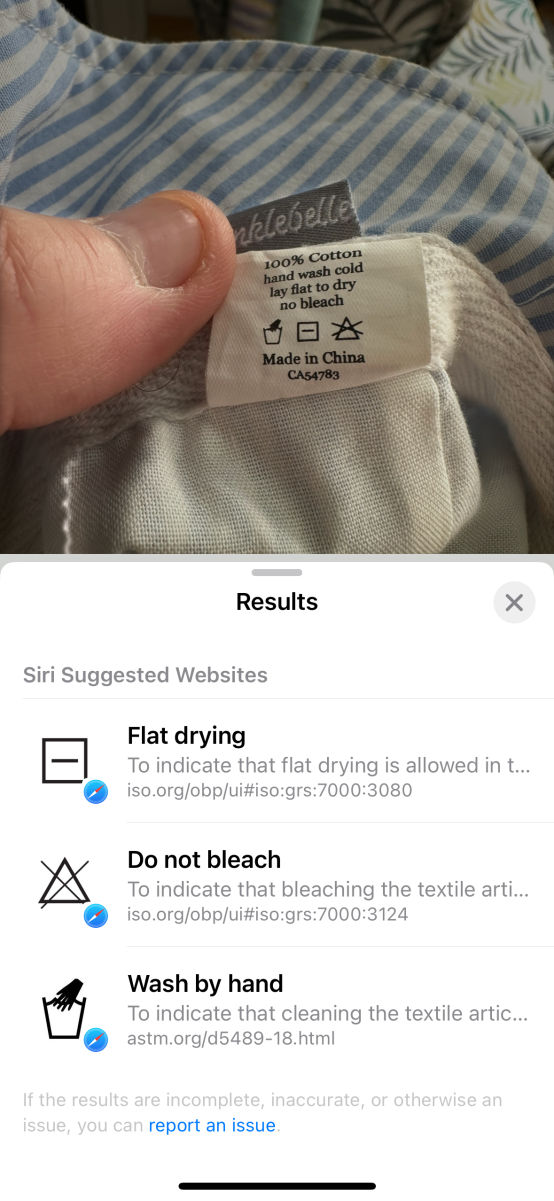

One of the most mind-blowing features that Apple has developed in recent years is Visual Lookup. When first introduced, it leveraged machine learning to identify a few categories of items seen in photos–including flowers, dogs, landmarks–with somewhat mixed results. But in iOS 17, Apple has improved this feature immensely, both in terms of reliability and by adding other categories, such as looking up recipes from a picture of a meal or identifying and interpreting those mystifying laundry symbols.

This, to me, is the real dream of augmented reality. To be able to look at something and instantly pull up information about it is a bit like having an annotated guide to the whole world. Moreover, it’s another feature that meshes perfectly with the idea of a lightweight AR wearable device: rather than looking at, say, a work of art, and then burying your nose in your phone to learn more, you can instead see the details right in front of you, or could even have interesting things overlaid or meshed with the actual artwork itself.

Visual Lookup on a headset seems like an ideal match.

Dan Moren

Apple didn’t show off any Visual Lookup features in its introduction to Vision Pro, but this is one place where there may be another shoe to drop. MacRumors’s Steve Moser poked around in the Vision Pro software development kit and found notice of a feature called Visual Search, which sounds as though it would do a lot of the same things, getting information from the world around you and augmenting it with additional details.

Vision of the future

On the face of it, the omission of all of these features makes sense with the story Apple told about where and when you’re likely to use the Vision Pro. Mapping, translation, and visual lookup are the kind of features that are most useful when you’re out and about, but the Vision Pro is still fundamentally a device that you go to, not one that you take with you.

Calling the Vision Pro a spatial computer might be a buzz-worthy way of avoiding terms like “headset” or “metaverse,” but it also conveys something intrinsic about the product: it really is the mixed reality analog of a computer—and, even there, almost more of a desktop than a laptop, given that it seems to be something that you mostly use in a fixed position.

But just as laptops and tablets and smartphones evolved the concept of a computer from something you sit at a desk to use to something that you put in a bag or your pocket, it seems obvious that the puck that Apple is skating towards is the Vision Pro’s equivalent of a MacBook or iPhone. Hopefully, it won’t take as long for Apple to get there as it did for the smartphone to evolve from the desktop computer, but don’t be surprised if what we ultimately get is just as different from the Vision Pro as the iPhone was from the original Mac.
